Turing Uncomputability

“As Leibniz suggested, we appear to live in the best of all possible worlds, where the computable functions make life predictable enough to be survivable ...

“As Leibniz suggested, we appear to live in the best of all possible worlds, where the computable functions make life predictable enough to be survivable, while the uncomputable functions make life (and mathematical truth) unpredictable enough to remain interesting, no matter how far computers continue to advance”—George Dyson

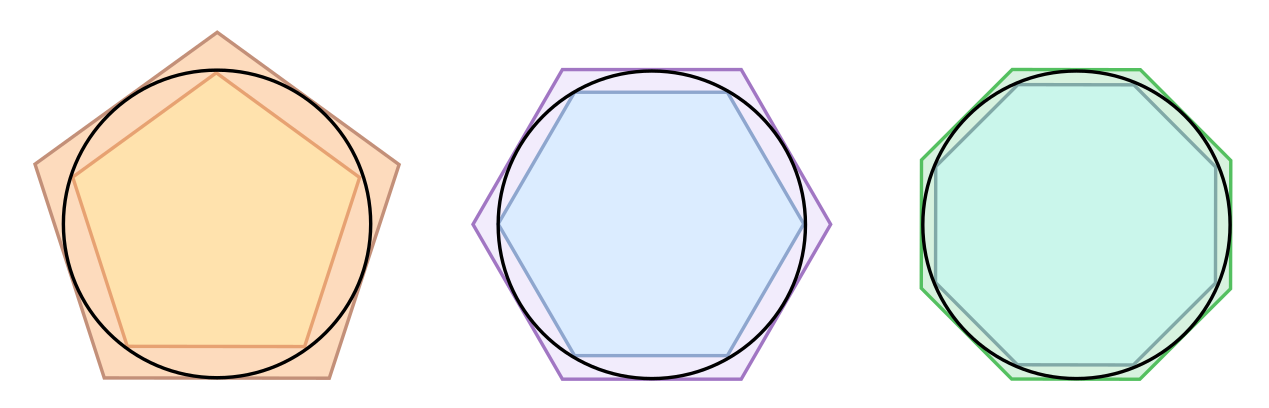

We all remember learning that the decimals of pi are infinite in number, 3.14159265359… Some of us even recall learning that you can approximate upper and lower bounds on the value of π to as high of a degree as you want by measuring the sides of polygons. As the number of sides of the polygon approach infinity and the length of their sides approach zero, your approximation gets better.

Archimedes (c. 287- 212) invented this early ‘polygonal algorithm’, which dominated for over 1,000 years as the most efficient way of computing π to any desired precision. The very primitive and geometric algorithmic function serves the purpose of estimating a real number, pi, whose value must be computed, as it is not expressible as a rational number (fraction/ratio), i.e. it is an irrational number.

The modern study of computability began around 1900 with the announcement of David Hilbert (1862-1943)’s ‘finitist’ program to axiomatize the foundations of mathematics. This after Hilbert himself had showed in 1899 that the consistency of geometry reduced to that of the real numbers, which in turn, reduced to the arithmetic results of Richard Dedekind (Soare, 2013). Hilbert’s program (1904), hence, was established to try to similarly exploit the finiteness of mathematical proofs to show that contradictions could not be derived. If such a foundation could be established, mathematical proofs would be expressible completely in terms of symbols of logic, such as those contained in Alfred North Whitehead (1861-1947) and Bertrand Russell (1872-1970)’s Principia Mathematica. If achieved, all proofs, including those of Fermat’s Last Theorem, the Riemann Hypothesis and the Poincaré Conjecture, would all be inevitable consequences of the mathematical system they are expressed in. In theory, one could build a sufficiently capable machine and let it run until all mathematical truths had be revealed.

Famously, not 30 years later, Austrian logician Kurt Gödel (1906–1978) published a paper, Über formal unentscheidbare Sätze der Principia Mathematica und verwandter Systeme I (“On Formally Undecidable Propositions of Principia Mathematica and Related Systems”), in which his “Theorem VI” shattered Hilbert’s dream of a fully axiomatized foundation for mathematics. Gödel showed, less than a one year after finishing his Ph.D., that such a system—no matter how robust—would always be inherently incomplete. In other words, there will always be statements expressible in a system which are unprovable given its axioms:

First incompleteness theorem (Gödel, 1931a)

Any consistent formal system F within which a certain amount of elementary arithmetic can be carried out is incomplete; i.e., there are statements of the language of F which can neither be proved nor disproved in F.

The paper effectively ended Hilbert’s finitist program. As Soare (2013) recounts, John von Neumann (1903–1957) happened to be in the audience as a representative of Hilbert’s program when Gödel, then 25 years old, took the podium to present his result. von Neumann immediately recognized that Hilbert’s program was over, and spent the next weeks preparing the proof of a related theorem. He had in mind an arithmetization of Gödel’s incompleteness result, to show that not only are formal systems incapable of proving every statement in them, they are also unable to guarantee proofs of their own consistency. He later presented his proof to Gödel, writing “using the methods you employed so successfully […] I achieved a result that seems to me to be remarkable, namely, I was able to to show that the consistency of mathematics is unprovable” (Dyson, 2005). Writing back, Gödel reportedly politely thanked the great man and informed him that he himself (Gödel) had written the same proof weeks earlier, and that it had already been submitted for publication. The theorem states:

Second incompleteness theorem (Gödel, 1931b)

No consistent axiomatic system which includes Peano arithmetic can prove its own consistency.

The theorem demonstrated an inherent fallacy of a second Hilbert program, initiated in 1918 called the Entscheidungsproblem (“decision problem”), of whether it is possible to provide a decision procedure that “allows one to decide the validity of a sentence”(Soare, 2013). Although Hilbert’s program of 1904 ended with Gödel’s proof, Hilbert’s Entscheidungsproblem remained open.

This is where this week’s story begins.

Definitions

Archimedes’ constant (π), along with other well-known numbers such as Pythagoras’ constant (√2) and the golden ratio (φ) are all examples of a type of real number which we say is computable, despite also being irrational (real numbers which cannot be constructed from fractions of integers). Such computable numbers may be defined as:

Computable numbers. Real numbers that can be computed to within any desired precision by a finite, terminating algorithm.

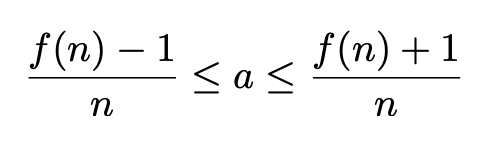

In other words, we define a real number as computable if there is an algorithm which, given n, returns the first n digits of the number. Informally, we can think of a computable number as Marvin Minsky (1927-2016) did, namely as “A number for which there is a Turing machine which, given n on its initial tape, terminates with the nth digit of that number [encoded on its tape]”. Formally, a real number a is computable if it can be approximated by some computable function from the natural numbers N to the real numbers Z which, given any positive integer n, the function produces an integer f(n) such that:

An equivalent definition of computable numbers is that there exists a function which, given any positive rational error bound ε produces a rational number r such that the absolute value |r - a| is less than or equal to the error bound ε.

History

Just as Georg Cantor (1845-1918) had spent the latter part of of the 19th century studying the uncountable sets that arose from his invention of the diagonal argument, researchers at Princeton University would spend the 1930s studying the implications of Gödel’s result for Hilbert’s Entscheidungsproblem and uncomputable numbers (Soare, 2013).

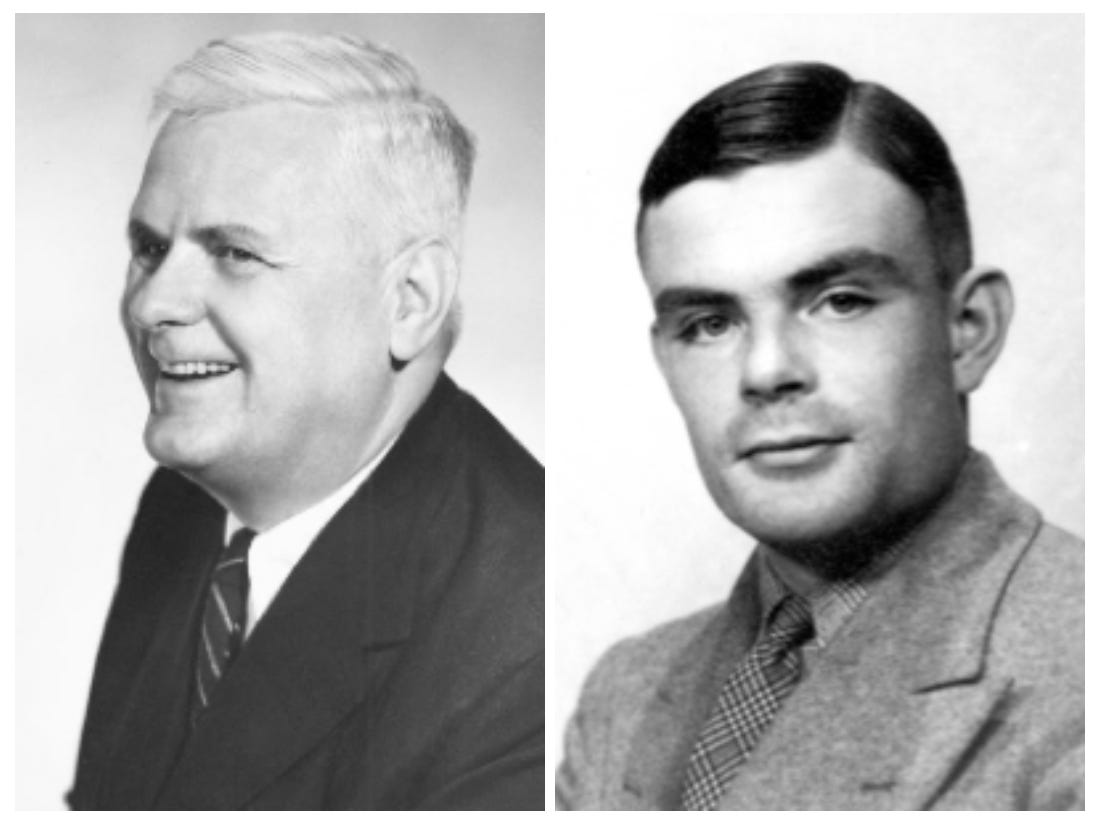

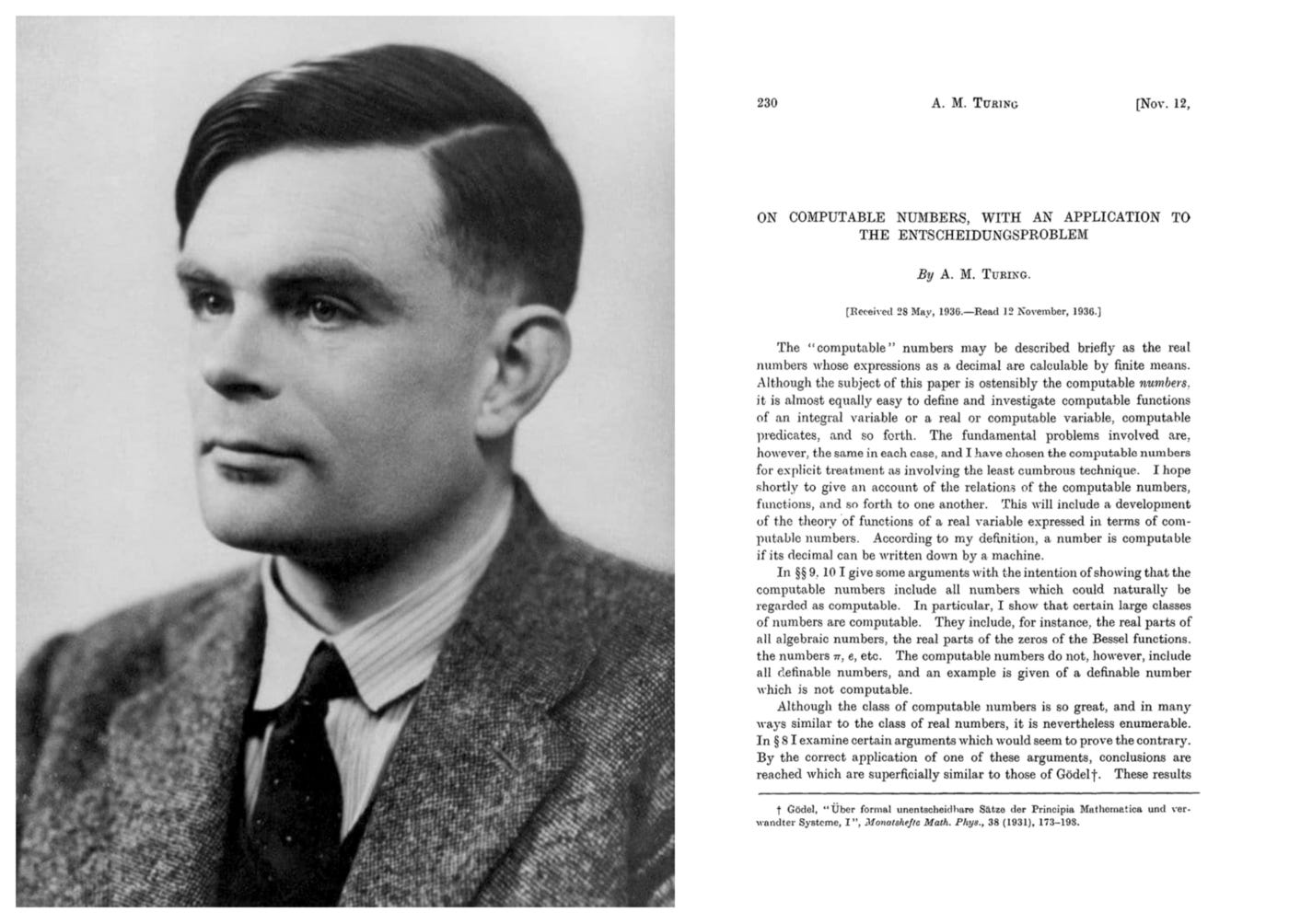

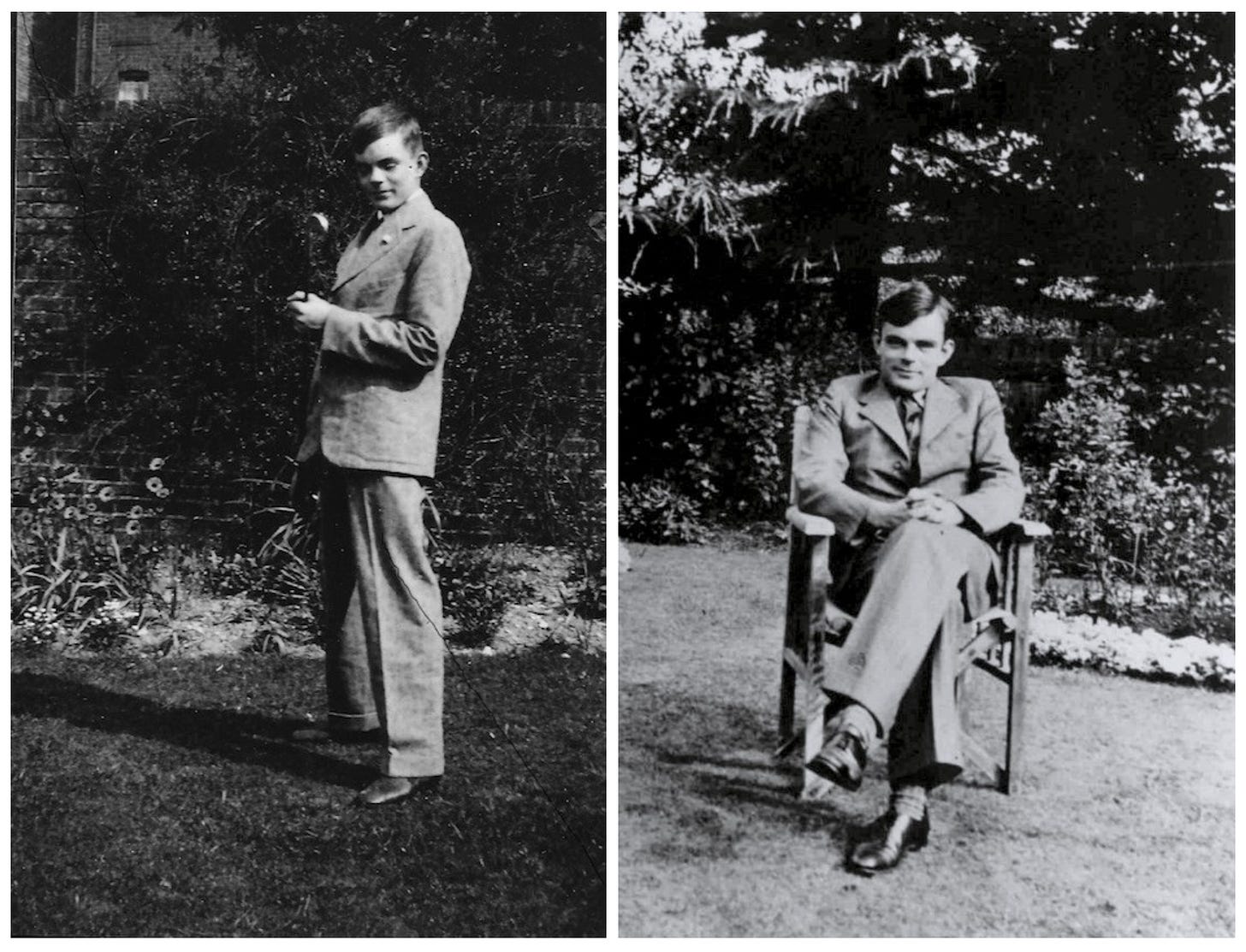

Alonzo Church (1903–1995) was an American mathematician and logician who earned his Ph.D. under Gödel’s friend and colleague Oswald Veblen (1880-1960) at Princeton University in the 1920s. His first published paper was on Lorentz transformations. His most well known results include the proof that Hilbert’s decision problem (Entscheidungsproblem) is undecidable (known as Church’s theorem), that Peano arithmetic is undecidable, his invention of λ-calculus and his articulation of what would come to known as the Church-Turing thesis about the nature of computable functions. Alan Turing (1912–1954) was an English mathematician most well known as the tour de force behind the successful Allied effort to break the Axis communication encryption device Enigma. For mathematicians, Turing’s name is synonymous with genius mostly for his visionary work in pure mathematics and logic 10 years prior. Turing was born in London in 1912, and by the time he was 17 years old was off to King’s College, Cambridge to study mathematics. He graduated with first-class honors in 1934 and in the same year was elected a fellow of King’s based solely on the strength of his thesis, which proved the Central Limit Theorem. He published his later renowned paper “On Computable Numbers, with an Application to the Entscheidungsproblem” in 1936. It was published in two parts in the Proceedings of the London Mathematical Society journal on the 30th of November and 23rd of December 1936.

In September of the same year, Turing travelled to Princeton University to study for a Ph.D. in mathematics under Church. He obtained his Ph.D. in 1938. His dissertation was entitled “Systems of Logic Based on Ordinals” which introduced ordinal logic and the notion of relative computing — augmenting his previously devised Turing machines with so-called oracle machines, allowing the study of problems that lay beyond the capability of Turing machines. Although inquired about by von Neumann for a position as a postdoctoral research assistant following his Ph.D., Turing declined and instead travelled back to England.

Definitions of Computability

As Robert I. Soare recounts (Copeland et al, 2013 p. 207), by 1931 the field of logic and computability was a young man’s game. Gödel, born in 1906, was 24 years old when he proved the incompleteness of formal systems in 1930. Church was 33 when he proved the undecidability of the Entscheidungsproblem with his invention of the concept of “effective calculability” based on his λ-calculus. Turing, independently, proved the same result in the same year with his invention of Turing machines. Born in 1912, he was 24 at the time.

Entscheidungsproblem

The concept of an Entscheidungsproblem—the problem of finding an algorithm that is able to determine the truth or falsity of an input based on a finite set of axioms—dates back to the seventeenth century when it was first imagined by Leibniz (Davis, 2000). Later, for various formal systems, the problem had also been posed by Schröder in 1895, Löwenheim in 1915, Hilbert in 1918 and Behaman in 1921 before Hilbert and Ackermann together published a formal statement in 1928 in Grundzuge der theoretischen Logik (“Principles of Mathematical Logic”):

The Entscheidungsproblem is solved when we know a procedure that allows for any given logical expression to decide by finitely many operations its validity or satisfiability.

Church’s Solution

Turing’s thesis advisor Alonzo Church first began working on the problem in 1933. He presented his proposed solution featuring what he called ‘effectively calculable functions’ to Gödel around March of 1934. Supposedly, Gödel rejected Church’s approach as “thoroughly unsatisfactory” (Soare, 2013). In the postscriptum to Gödel’s notes from a lecture held at the Institute for Advanced Study (IAS) in 1934, he repeats, but reiterates in even more bold terms his dissatisfaction with Alonzo’s approach.

Based on λ-calculus, Church’s first definition of computability said:

First definition of computability (Church, 1934)

A function is effectively calculable if and only if it is λ-definable.

Church and his other graduate student Stephen C. Kleene (1909-94) had worked together on defining functions as λ-definable since 1931. By 1934, they had shown that all the usual number theoretic functions were λ-definable. Yet still, as Gödel knew, primitive recursive functions as stated in his own 1931 paper did not include all computable functions and so would be an insufficient foundation for claiming the effective computability of all numbers. To account for this, Gödel in the spring of 1934 expanded on a concept introduced by the brilliant Jacques Herbrand (1908–1931) to define Herbrand-Gödel recursive functions (now known simply as recursive functions), improvements on those defined in Gödel’s 1931 paper (now called ‘primitive’ recursive functions). After attending lectures on the subject held by Gödel, Church and Kleene eventually revised their definition of computability to be based instead on Herbrand-Gödel recursive functions (Soare, 2013):

Second definition of computability (Church, 1936)

A function on the positive integers is effectively calculable if and only if it is recursive.

Church and Keene would later be able to show that the λ-definable functions they had used in their first definition and the Herbrand-Gödel recursive functions they used in their second were formally equivalent. However, Gödel never came to accept Church’s definition of computing (Gödel, 1995), disagreeing fundamentally with its approach. The key weakness of Church’s argument according to Sieg (1994, p.80) was that “steps taken in a calculus must be of a restricted character and they are [here] assumed without argument to be recursive” adding that “this is precisely where we encounter the major stumbling block for Church’s analysis”, a stumbling block that was “quite clearly seen [both] by Church” himself, and Gödel.

Turing’s Solution

More or less simultaneously as this debate was taking place in Princeton, Alan Turing arrived to work on the same problems, of both

1. Attempting to settle Hilbert’s Entscheidungsproblem; and in the process

2. Provide a complete, final definition of computability.

As Max Newman (1897-1984) wrote to Church on May the 31st 1936,

“Dear Professor Church.

An offprint which you kindly sent me recently of your paper in which you define ‘calculable numbers’, and show that the Entscheidungsproblem for Hilbert logic is insoluble, had a rather painful interest for a young man, A.M. Turing, here, who was just about to send in for publication a paper in which he used a definition of ‘computable numbers’ for the same purpose. His treatment—which consists in describing a machine which will grind out any computable sequence— is rather different from yours, but seems to be of great merit, and I think it of great importance that he should come and work with you next year if that is at all possible. He is sending you the typescript of his paper for your criticisms. […] I should mention that Turing’s work is entirely independent: he has been working without any supervision or criticism from anyone.”

It is telling that, as Hodges (1983) writes, “There was no one in England who could referee the paper for publication, and in fact Church himself was the only person who could reasonably do so”. Turing’s paper was accepted and published despite its conclusions overlapping with those of Church, as their methods were sufficiently different. When published in the Proceedings of the London Mathematical Society 42 on November 30th 1936, Turing’s paper defined computability as (Soare, 2013):

Turing's definition of computability (1936)

A function is intuitively computable (effectively computable) if and only if it is computable by a Turing machine; that is, an automatic machine (a-machine) as defined in Turing (1936).

Turing in his paper notes the similarity in his definition to that of Church’s paper published seven months before, stating: “In a recent paper Alonzo Church has introduced an idea of “effective calculability” which is equivalent to my “computability”, but is very differently defined.” adding “Church reaches similar conclusions about the Entscheidungsproblem”.

Church invited Turing to give a seminar on his discovery and methods to the Princeton mathematicians. Alan accepted, writing later that “I hope I shall be able to get an opportunity to do this, as it will bring the thing to people’s attention a bit”. Turing did present his findings, although unfortunately in poorly attended seminar. He later wrote home that “I was disappointed by its reception here. I expected Weyl who had done some work connected quite closely with it some years ago, at least to have made a few remarks”. Hermann Weyl (1885-1955), like von Neumann, had worked on Hilbert’s program before Gödel’s result made him lose interest, claiming once that he never read another paper in logic after 1931.

Unlike Church and Kleene, Turing’s definition of computability did not rely on the recursive functions of Gödel (1931) or Herbrand-Gödel (1934). Rather, Turing instead presented an analysis of how humans would go about carrying out a calculation, showing step by step that the same procedure could also be conducted by a machine. In terms of the rigorousness of this analysis, Gandy (1988) later wrote “Turing’s analysis does much more than provide an argument, it proves a theorem”, without “making any references whatsoever to calculating machines”. Instead, “Turing machines appear as a result, a codification, of his analysis of calculations by humans” (Soare, 2013).

Turing had invented a new formal model, his concept of an a-machine or a Turing machine. A Turing machine may be defined informally as:

A Turing machine (a-machine) is a mathematical model of computation that defines an abstract machine which manipulates symbols on a strip of tape according to a table of rules. Despite the model's simplicity, given any computer algorithm, a Turing machine capable of simulating that algorithm's logic can be constructed.

Turing’s definition aligned much more closely with Gödel’s view of computation (Soare, 2013):

"But Turing has shown more. He has shown that the computable functions defined in this way are exactly those for which you can construct a machine with finite number of parts which will do the following thing. If you write down any number n₁, n₂, ..., nᵣ on a slip of paper and put the slip into the machien and turn the crank then after a finite number of turns the machine will stop and the value of the function for the argument n₁, n₂, ..., nᵣ will be printed on the paper."

As Gödel later wrote in a letter to Martin Davis (1928-):

"In consequence of later advances, in particular of the fact, that, due to A. M. Turing's work, a precise and unquestionably adequate definition of the general concept of formal system can now be given, the existence of undecidable arithmetical propositions and the non-demonstrability of the consistence of a system in the same system can now be proved rigorously for every consistent formal system containing a certain amount of finitary number theory.”

Uncomputability

While we know that the set of real numbers is uncountable, the set of computable numbers is countable, and thus we know that most real numbers are not computable. The proof that the computable numbers is countable arises intuitively from the fact that they may all be produced by Turing machines, of which there are only countably many variations (i.e. they can be put into one-to-one correspondance with the natural numbers). Formally:

Proof sketch of the countability of the computable numbers

By assigning a Gödel number to each Turing machine definition produces a subset S of the natural numbers corresponding to the computable numbers. A surjection from S to the computable numbers shows that the computable numbers are at most countable.

Chaitin’s constant Ω

Argentine-American mathematician Gregory Chaitin in 1975 proposed a real number that is not computable, now called Chaitin’s constant, omega Ω. It represents the probability that a randomly constructed program will halt/terminate:

Chaitin's Thought Experiment (Barmpalias, 2018)

Suppose we run a universal Turing machine on a random binary program. Specifically, whenever the next bit of the program is required, we flip a coin and feed the binary output to the machine. What is the probability that the Turing machine will halt?

Chaitin’s thought experiment is a prototypical example of a halting problem. A halting problem is the problem of determining, from a description of an arbitrary computer program and an input, whether the program will finish running (halt) or continue to run forever. In the context of self-delimiting machines the halting probability Ωᵤ of a Turing machine U takes the following expression:

Where σ represents random finite binary programs and U(σ)↓ denotes the fact that U will halt on input σ.

In Turing’s 1936 paper he proved that a general algorithm to solve the halting problem for all possible program-input pairs cannot exist. No halting probability is computable. The proof of this fact relies on an algorithm which, given the first n digits of Ω, solves Turing’s halting problem for programs of length up to n. Since the halting problem is undecidable, Ω cannot be computed.

The Busy Beaver Problem BB(n)

Tibor Radó in a 1962 paper entitled “On Non-Computable Functions” posed the following problem:

What is the largest finite number of 1s that can be produced on blank tape using a Turing machine with n states?

The problem has later come to be known as the Busy Beaver problem. As a game, the problem is often stated as: finding a terminating program of a given size that produces the most output possible. The problem has a natural expression as a mathematical function BB(n) where if n represents the number of states, let BB(n) represent the largest finite number of 1s that can be written on blank tape by a machine of that size.

So, BB(1) = 1, BB(2) = 4, BB(3) = 6.

For BB(4) the problem gets much harder. Proving that BB(4) = 13 was a Ph.D. thesis. The value of BB(5) not known, but at least 4098 (found by Marxen & Buntrock in 1989), and BB(6) at least 3.5 x 10¹⁸²⁶⁷ (found by Kropitz in 2010).

The BB(n) function is an example of an uncomputable function. That is, there is no Turing machine Mᴮᴮ that computes the BB function. This is provable by contradiction:

Proof that BB(n) is not computable (Roberts, 2016)

We start by assuming that the Busy Beaver function BB(n) is computable, and that there exists a Turing machine Mᴮᴮ with β states that takes n as an input, writes BB(n) number of 1s as output and then halts. Let Clean denote a Turing machine cleaning sequence of 1s initially written on the tape. Let Double denote a Turing machine evaluating function n + n.

Given a tape with n 1s it will produce 2n 1s on the tape, then halt. Now create the routine 'Double | EvalBB | Clean' and let n₀ be the number of states of this machine. Let Create_n₀ denote a Turing machine creating n₀ 1s on an initially blank tape. Let N denote the sum n₀ + n₀.

Let BadBB denote the routine 'Create_n₀ | Double | EvalBB | Clean'. This machine has N states (n₀ + n₀). Running BadBB will produce BB(N) 1s on the tape before clearing all 1s and halting. But the step of cleaning will continue at least BB(N) steps, so the computation time of BadBB must be strictly greater than BB(N), which contradicts the definition of the function BB(n).

Apart from being an interesting and challenging mathematical game, the Busy Beaver problem also has intriguing implications in practice. For Hilbert’s Entscheidungsproblem, suppose any mathematical conjecture can be disproven via counterexample/existence proof among a countable number of cases (e.g. Goldbach’s conjecture). If such conjectures are true, the Turing machine looking for counter-examples will never halt. If it is not true, it will halt and notify us that it has found a counter-example. If we simulate an n-state Turing machine and know the value of BB(n) we can decide whether or not it will ever halt by running the machine that many steps. If, after BB(n) steps the machine does not halt, we know that it will never halt and thus that there are no counterexamples, which would prove the conjecture to be true. However true in theory, this is not currently achievable in practice. Wikipedia lists two reasons:

- It is extremely hard to prove values for the busy beaver function (case in point, BB(6) having at least 3.5 x 10¹⁸²⁶⁷ value) and it is assumed that one would need at least 20–50 states to make a useful problem-solving machine. Furthermore, the few states that are solved were solved using a manual checking method for each n-state Turing machine, which would not be practically possible for a machine with larger states.

- Even if one does find a more efficient way of calculating BB(n) states, the value of the function get very large, very fast (we know that BB(17) is larger than G, Graham’s number). Even BB(6) would require more computational capacity than exists in the known part of the universe to be performed directly (Lloyd, 2001).

Penrose Tiling

A Penrose tiling is an example of non-periodic tiling generated by an aperiodic set of prototiles, named after mathematican/physicst and 2020 Nobel Prize laureate Sir Roger Penrose (1931-) Because such patterns are non-periodic, they lack translational symmetry. They are also self-similar (“fractal”), as they appear the same at larger and larger scales.

The question of whether a set of tiles will cover the plane was conjectured by Hao Wang in 1961. The problem was later shown to be uncomputable. The proof relies on using tiles to simulate a Turing machine, configuring the tiles in such a way that they cover the complete plane only if the Turing machine runs forever starting with a blank tape. Since the Halting problem is undecidable, the tiling problem must be undecidable and so also uncomputable.

Epilogue

Today, in mathematics and computer science, Turing machines are the primary formalism for defining computable functions (Soare, 2013 p. 213). However, in recognition to Church for grasping the essence of such functions first, the modern-day formulation of the hypothesis about the nature of computable functions is now known as the Church-Turing thesis:

Church-Turing thesis. A function is intuitively computable if and only if it is computable by a Turing machine, or equivalently if it is specified by a recursive function.

One of its implications is that:

No method of computing carried out by a mechanical process can be more powerful than a Turing machine.

Although widely adopted, as there is no clear way to prove or disprove its validity the proposition still remains a conjecture.

Those interested in learning more about computability and the history of the topic are encourage to acquire the book Computability: Turing, Gödel, Church and Beyond* by Copeland et al (2013). The best biography of Turing remains—in my opinion—Andrew Hodges’ Alan Turing: The Enigma*. The essay ‘Turing and the Uncomputable’ in The New Atlantis is also quite good.

References

- Barmpalias, G. 2018. Aspects of Chaitin’s Omega. Working paper.

- Church, A. 1936. An Unsolvable Problem of Elementary Number Theory. Journal of Mathematics 58 (2). pp. 345–363.

- Copeland, BJ., Posy, CJ., Shagrir, O. 2013. Computability. Turing, Gödel, Church, and Beyond. MIT.

- Gödel, K. 1931a. Über formal unentscheidbare Sätze der Principia Mathematica und verwandter Systeme I. Monatshefte für Mathematik und Physik 38: 173–98.

- Hilbert, D. 1899. Foundations of Geometry.

- Hilbert, D. and Ackermann, W. (1928).Grundzuge der theoretischen Logik.

- Hodge, A. 1983. Alan Turing: The Enigma*. Burnett Books.

- Lloyd, 2001. Computational Capacity of the Universe.

- Roberts, 2016. Uncomputational Functions. Handout #15. October 24, 2016

- Sieg, U. 1994. Aufstieg und Niedergang des Marburger Neukantianismus: die Geschichte einer philosophischen Schulgemeinschaft.

- Soare, R.I, 2013. Interactive Computing and Relativized Computability in Computability. Turing, Gödel, Church and Beyond. MIT. pp. 203.

- Turing, A. 1936. On computable numbers, with an application to the Entscheidungsproblem. Proceedings of the London Mathematical Society 42.

- Wang, H. 1961. Proving theorems by pattern recognition — II, Bell System Technical Journal, 40 (1) pp. 1–41,

- Whitehead A. & Russell, B. 1910. Principia Mathematica, vol. I

- Whitehead A. & Russell, B. 1912. Principia Mathematica, vol. II

- Whitehead A. & Russell, B. 1913. Principia Mathematica, vol. III

* This essay contains Amazon Affiliate Links