The Two Envelopes Problem or Necktie Paradox

Should I switch or should I stay? The Switching Paradox

There are two envelopes. You are allowed to pick one and have a look at the amount of money it contains. You take a look and see $20.

I tell you that one of the envelopes contains twice as much money as the other and offer to let you switch envelopes.

Should you switch?

Shouldn’t make a difference, should it?

Because the problem is completely symmetrical, isn’t it? After all, there should be a 50 % chance that the $20 you have is the lower amount and a 50 % chance that it is the higher amount. So, of course, switching shouldn’t change anything, and the obvious answer is that you wouldn’t gain anything (on average) by switching to the other envelope.

But what if I told you that by switching, you would increase the expected amount of money you win from $20 to $25?

Because obviously, if the $20 is the higher amount, switching gets you $10. If the $20 is the lower amount, switching gets you $40.

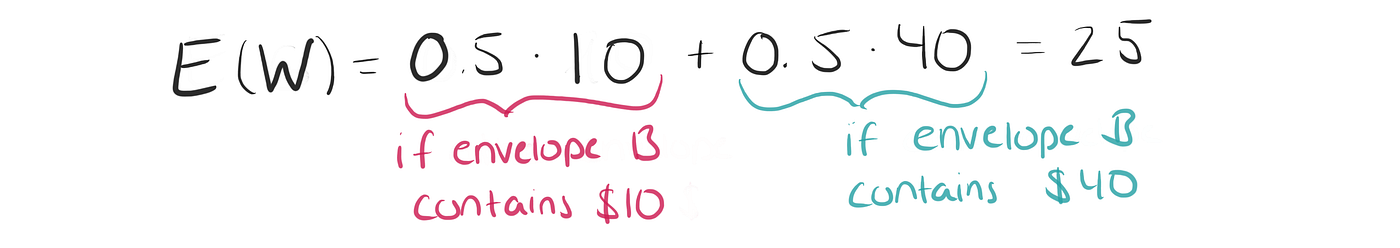

All in all, this equals a total expected win W of

$$E[W] = \frac{1}{2} \cdot \$10 + \frac{1}{2} \cdot \$40 = \$25.$$

Since we have $20 in our hands and switching would mean that, on average, we get $25 instead, switching envelopes would be the reasonable thing to do.
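The naive calculation can be written out in a few lines of Python. This is a minimal sketch that deliberately reproduces the flawed assumption, namely that the other envelope holds half or double the observed amount with probability 1/2 each; `naive_expected_switch_value` is a hypothetical helper name, not part of any library.

```python
def naive_expected_switch_value(observed: float) -> float:
    """Reproduce the flawed argument: treat the other envelope as holding
    half or double the observed amount, each with probability 1/2."""
    p_other_is_half = 0.5
    p_other_is_double = 0.5
    return p_other_is_half * (observed / 2) + p_other_is_double * (observed * 2)


print(naive_expected_switch_value(20))  # 25.0
```

Since the result is always 1.25 times the observed amount, this rule would recommend switching again right after switching, whatever the envelope contains.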

Maybe you’re just as startled as I was when I first heard about this problem. After all, this seems absurd. Even more absurd than the famous Monty Hall problem (which, in fact, can be explained very nicely).

On closer thought, the above reasoning must be wrong. After all, if swapping envelopes always led to an expected gain, the same argument would apply again after the swap, so the reasonable thing to do would be to keep swapping forever and gain an infinite amount of money.

Don’t be fooled: infinite swapping is not the solution, and we do not gain anything by swapping envelopes.

Also, the above calculation for the expected value is, indeed, wrong. The interesting question remains: Why?

Let’s start with a simple, intuitive explanation.

Let’s say x is the amount of money to be found in one envelope and 2x is the amount of money found in the other envelope.

x = money in one envelope

2x = money in the other envelope

If we first picked the envelope with x dollars in it, we would gain another x dollars by switching. If we first picked the envelope with 2x dollars in it, we would lose x dollars by switching.

In total, this leads to an expected gain G of

$$E[G] = \frac{1}{2} \cdot x + \frac{1}{2} \cdot (-x) = 0,$$

which makes total sense. So what went wrong in our above reasoning?
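A quick Monte Carlo sketch makes the zero expected gain tangible. It assumes an illustrative fixed value x = 10 (so the envelopes hold $10 and $20) and treats only the first pick as random; `average_switch_gain` is a hypothetical helper name.

```python
import random


def average_switch_gain(x: float, trials: int = 100_000, seed: int = 0) -> float:
    """Estimate the expected gain G from switching when the envelopes hold
    the fixed amounts x and 2x and the first pick is a fair coin flip."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    envelopes = (x, 2 * x)
    total_gain = 0.0
    for _ in range(trials):
        first = rng.randrange(2)  # index of the envelope we open first
        kept = envelopes[first]
        other = envelopes[1 - first]
        total_gain += other - kept  # +x if we held x, -x if we held 2x
    return total_gain / trials


print(average_switch_gain(10))  # close to 0
```

Changing x or the seed only shifts the estimate by sampling noise; the average stays near 0 because the +x and -x outcomes are equally likely.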

First Approach: Calculating Expected Values and Including Conditional Probabilities

The problem lies in how the random variable is defined, or rather in the conditions that the first calculation omits.

Just because we know that one envelope contains twice as much as the other does not mean that there is a 50 % chance that the other envelope contains twice the amount we saw when we opened the first envelope. It only means that there is a 50 % chance that what we saw is the amount 2x and a 50 % chance that it is only the amount x.

Let’s first calculate the expected amount of money contained in envelope A.

There is a 50 % chance that envelope A contains the amount x and a 50 % chance that it contains 2x. Thus, the expected amount of money in envelope A is

$$E[A] = \frac{1}{2} \cdot x + \frac{1}{2} \cdot 2x = \frac{3}{2}x.$$

Before opening envelope A, the same holds true for envelope B:

$$E[B] = \frac{1}{2} \cdot 2x + \frac{1}{2} \cdot x = \frac{3}{2}x.$$
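Both expectations can be checked exactly by enumerating the two equally likely assignments of x and 2x to envelopes A and B. This sketch uses Python’s `fractions.Fraction` to avoid rounding and picks an illustrative fixed amount x = 10; `envelope_expectations` is a hypothetical helper name.

```python
from fractions import Fraction


def envelope_expectations(x: Fraction) -> tuple[Fraction, Fraction]:
    """Return (E[A], E[B]) when A gets x or 2x with probability 1/2 each
    and B gets the other amount."""
    half = Fraction(1, 2)
    # The two equally likely outcomes, written as (A, B) pairs.
    outcomes = [(x, 2 * x), (2 * x, x)]
    expected_a = sum(half * a for a, _ in outcomes)
    expected_b = sum(half * b for _, b in outcomes)
    return expected_a, expected_b


print(envelope_expectations(Fraction(10)))  # (Fraction(15, 1), Fraction(15, 1)), i.e. 1.5x each
```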

What happens now after opening envelope A?

Before opening envelope A, the expected value in each of the envelopes is 1.5x.

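The expected value of B should not have changed, and in fact, it hasn’t. It just becomes a bit more complicated to calculate after opening envelope A, because now we have to use conditional probabilities:

$$E[B] = E[B \mid A = x] \cdot P(A = x) + E[B \mid A = 2x] \cdot P(A = 2x) = 2x \cdot \frac{1}{2} + x \cdot \frac{1}{2} = \frac{3}{2}x.$$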

Yet, we still arrive at the same conclusion and the same expected value of 1.5x for the amount of money contained in B.
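The conditional-probability calculation can be mirrored directly: compute E[B | A = a] for each possible value a and weight it by P(A = a). As before, the fixed amount x = 10 is only an illustrative choice (any x > 0 works), and `expected_b_via_conditioning` is a hypothetical helper name.

```python
from fractions import Fraction


def expected_b_via_conditioning(x: Fraction) -> Fraction:
    """Law of total expectation: E[B] = sum over a of E[B | A = a] * P(A = a)."""
    half = Fraction(1, 2)
    # Given A = x, B must hold 2x; given A = 2x, B must hold x.
    conditional_b = {x: 2 * x, 2 * x: x}
    prob_a = {x: half, 2 * x: half}
    return sum(conditional_b[a] * prob_a[a] for a in prob_a)


print(expected_b_via_conditioning(Fraction(10)))  # 15, i.e. 1.5x
```

Note that each conditional expectation is a single fixed number (2x or x), not a 50/50 mixture; the averaging happens only through P(A = a).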

On a more basic level… what went wrong in the first calculation?

Let’s go back to the beginning. Even though the previous section explains quite well what the correct answer is, I personally don’t find it 100 % satisfying yet, because in my opinion it does not fully explain why my first attempt to calculate the expected value is plain wrong.

The real explanation lies in the definition of random variables. In the first calculation, I treated the amounts of money x and 2x in the envelopes as random variables. But they are not. In fact, these values are fixed (but unknown). Thus, there is no (general) 50 % chance that the unopened envelope contains twice as much money. There is a 100 % chance that the unopened envelope contains twice as much money as the first one if we first opened the envelope with x dollars in it.

Likewise, there is a 0 % chance that the unopened envelope contains twice as much money as the first one if we first opened the envelope with 2x dollars in it.
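The 100 % versus 0 % statement can be checked by simulation. The sketch below assumes an illustrative fixed x = 10 and records, separately for each amount we might open first, how often the unopened envelope holds twice that amount; `double_frequency_by_opened` is a hypothetical helper name.

```python
import random
from collections import defaultdict


def double_frequency_by_opened(x: float, trials: int = 10_000, seed: int = 1) -> dict[float, float]:
    """For fixed amounts x and 2x, estimate how often the unopened envelope
    holds twice the opened amount, separately for each amount we might open."""
    rng = random.Random(seed)
    envelopes = (x, 2 * x)
    hits = defaultdict(int)    # opened amount -> times the other was double
    counts = defaultdict(int)  # opened amount -> times that amount was opened
    for _ in range(trials):
        first = rng.randrange(2)
        opened, other = envelopes[first], envelopes[1 - first]
        counts[opened] += 1
        hits[opened] += int(other == 2 * opened)
    return {amount: hits[amount] / counts[amount] for amount in counts}


print(double_frequency_by_opened(10))  # {10: 1.0, 20: 0.0} (key order may vary)
```

Pooled over all trials, the other envelope is double in roughly half of them, but that 50 % is an average over which envelope was opened, not a property of an envelope with fixed contents.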

The amount of money x is not a random variable. It is a fixed value.

That’s why we use a small letter to describe it. However, the amounts of money contained in envelopes A and B are random variables. That’s why we use capital letters for these amounts.

There is no 50 % chance that envelope B contains $40. It either contains $40 or it doesn’t. It either contains $10 or it doesn’t. We simply don’t know. The amount x is fixed, not random. It doesn’t have an underlying probability distribution. Hence, we use a small letter, not a capital one.
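The point can be made concrete with a small enumeration. It assumes two hypothetical fixed values of x, $10 and $20, both consistent with having seen $20 in envelope A. For each fixed x, the content of B is completely determined once A is known; a 50/50 split between $10 and $40 only appears if we also put a probability distribution on x itself, which, in this article’s framing, is what the first calculation implicitly did. `possible_b_given_a` is a hypothetical helper name.

```python
def possible_b_given_a(x: int, observed_a: int) -> set[int]:
    """With fixed amounts x and 2x, return every value B can take in the
    outcomes where envelope A shows observed_a."""
    outcomes = [(x, 2 * x), (2 * x, x)]  # (A, B), each with probability 1/2
    return {b for a, b in outcomes if a == observed_a}


for x in (10, 20):  # two hypothetical fixed values consistent with seeing $20
    print(x, possible_b_given_a(x, 20))
# 10 {10}
# 20 {40}
```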

It’s a small difference, but an important one. Understanding it not only keeps you from being fooled by such confusing yet simple paradoxes, but also helps you understand frequentist statistics better!

The Original Problem

The problem was originally called the necktie problem. Two men were given neckties as Christmas presents by their wives and argued about who had the cheaper and who had the more expensive necktie. They decided to go home to their wives and ask about the prices.

They also agreed that whoever had the more expensive necktie had to give it to the other man.

The first man reasoned that in the worst case, he would lose his necktie. But in the better case, he would win the other, more expensive necktie, thus gaining more than he already had.

The second man used the same reasoning. Thus they both believed that the bet was in their favor.

It’s easier to understand the problem using envelopes and money, but the explanation of where the reasoning goes wrong stays the same.

There are many more explanations to debunk this paradox. What’s your favorite one?