Let’s Derive the Power Rule from Scratch!

From nothing but the definitions of derivatives and limits, we’ll prove one of the first derivative rules you’ll learn in undergrad calculus.

If you wish to make an apple pie from scratch, you must first invent the universe. — Carl Sagan

When most students first see the power rule in Calculus, the rule is usually offered without proof or with a partial proof. If these students are lucky, a proof for all the cases will be given several chapters later. I understand why this gap exists, but students would learn much more from a full proof without the advanced concepts. Even if you find the proof given in these textbooks to be sufficient, another proof couldn’t hurt. In this proof, I will not only prove the power rule, but I will also

- prove the product rule,

- introduce students to proof by induction,

- prove the chain rule,

- introduce a little bit of real analysis (you shouldn’t need to be a math professor to keep up),

- and show students some useful techniques they can use in their own proofs.

The Rules

For this proof, I won’t allow myself to use anything besides

- the definition of a limit,

- the definition of the derivative,

- and anything you would know from a standard algebra course,

- including the rules of exponents and the properties of various algebraic structures (integers, rational numbers, and real numbers).

These constraints will prevent me from using

- the derivative of a logarithm,

- the derivative of the exponential function,

- or the binomial theorem.

Most proofs I’ve seen use at least one of these.

Proof Structure

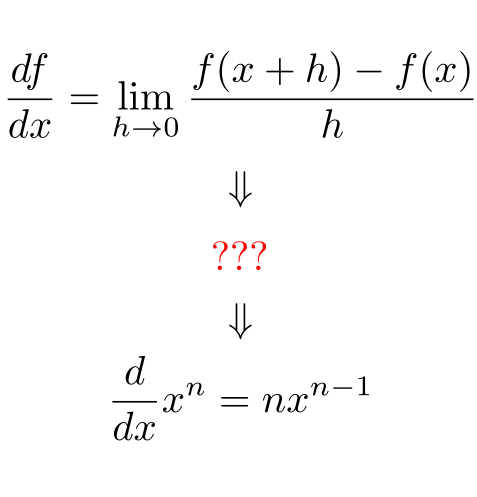

Instead, my proof will have the following structure:

- Prove the product rule.

- Prove the case where n is an integer using the product rule with some induction.

- Prove the chain rule.

- Prove the case where n is a rational number using the chain rule.

- Prove the case where n is an irrational number, thereby proving the power rule for all real numbers.

The Product Rule

Remember that x⁴ = x • x³. If we know how to take the derivative of x, x³, and the product of two functions, we can take the derivative of x⁴. For this reason, we will prove the product rule.

We want to prove the product rule from the definition of the derivative. More specifically, we’re looking for an expression for the derivative of a product in terms of the two functions and their derivatives. First, we’ll define a function z(x) = f(x)g(x). Then, we’ll take the derivative of z with respect to x. Since we’re talking about arbitrary functions, we have to use the definition of the derivative:

$$z'(x) = \lim_{h \to 0} \frac{f(x + h)\,g(x + h) - f(x)\,g(x)}{h}$$

If nothing jumps out at you, look for some way to rewrite the expression in a different form. Since we have an f(x + h) and a g(x + h) in our expression, we should try to somehow get either f(x + h) - f(x) or g(x + h) - g(x) into our expression. Doing so will let us replace them with a derivative. In this case, we can use the classic technique of adding zero. For example, we could add f(x + h) - f(x + h) to the numerator and nothing would change. We’ll want to add f(x + h) g(x) - f(x + h) g(x) to the numerator, at which point the algebra looks like

$$\begin{aligned} z'(x) &= \lim_{h \to 0} \frac{f(x + h)\,g(x + h) - f(x + h)\,g(x) + f(x + h)\,g(x) - f(x)\,g(x)}{h} \\ &= \lim_{h \to 0} f(x + h)\,\frac{g(x + h) - g(x)}{h} + \lim_{h \to 0} g(x)\,\frac{f(x + h) - f(x)}{h} \end{aligned}$$

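To make things easier on us, I’m going to handle each limit separately and put them back together. The first limit is

$$\lim_{h \to 0} f(x + h)\,\frac{g(x + h) - g(x)}{h} = f(x)\,g'(x),$$

since f is differentiable, and therefore continuous, at x, so f(x + h) → f(x). The second limit is

$$\lim_{h \to 0} g(x)\,\frac{f(x + h) - f(x)}{h} = g(x)\,f'(x).$$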

We have therefore proven the product rule:

$$\frac{d}{dx}\big[f(x)\,g(x)\big] = f'(x)\,g(x) + f(x)\,g'(x)$$
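As a quick numerical sanity check (not a proof), the sketch below approximates each derivative with a difference quotient at a small, fixed h and compares (fg)' with f'g + fg'. The functions f and g are arbitrary examples chosen for illustration, and the helper `derivative` uses the symmetric quotient (f(x + h) - f(x - h))/(2h) instead of the one-sided definition only because it is more accurate for a fixed h:

```python
import math


def derivative(func, x, h=1e-6):
    """Approximate func'(x) with a symmetric (central) difference quotient."""
    return (func(x + h) - func(x - h)) / (2 * h)


# Two arbitrary example functions; nothing about their derivatives is assumed.
def f(x):
    return math.sin(x) + x ** 2


def g(x):
    return math.sqrt(x) + 3


def z(x):
    return f(x) * g(x)


x = 1.7
lhs = derivative(z, x)                                    # (fg)'(x), measured directly
rhs = derivative(f, x) * g(x) + f(x) * derivative(g, x)  # f'(x)g(x) + f(x)g'(x)
print(f"(fg)' ~ {lhs:.8f}, f'g + fg' ~ {rhs:.8f}")
```

The two numbers agree to many decimal places; any tiny mismatch comes from using a finite h rather than the limit.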

If you want some visual intuition for why the product rule takes this form, check out 3blue1brown’s video on the Product Rule and the Chain Rule.

Proving the Case Where n is an Integer

We have three cases:

- n = 0

- n > 0

- n < 0

If we prove each case, we’re done with this part.

Proving the Case Where n = 0

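Since x⁰ = 1 for every x ≠ 0, the definition of the derivative gives

$$\frac{d}{dx} x^0 = \lim_{h \to 0} \frac{1 - 1}{h} = 0 = 0 \cdot x^{0 - 1}.$$

And we’re done with that.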

Proving the Case Where n > 0

If you took the derivatives of functions like x, x², x³, and so on using the limit definition of the derivative, you might see that they follow a simple pattern: the power rule. Since we’re only looking at natural numbers and the cases n = 0 and n = 1 are easy to prove directly, we might want to try a proof by induction.

Proof by Induction

To prove something with induction, you

- prove a base case

- and show that each case proves the next case (weak induction) OR show that all the proven cases prove the next case (strong induction).

Don’t let the names fool you: strong and weak induction are equivalent, but I can’t go into the details in this article. For this proof, we’re going to use weak induction. After showing you this proof, I will try to give you an intuitive sense of why it works. In doing so, I’m going to break with tradition slightly. Normally, a weak induction proof refers to the cases n and n + 1 in the second step, but I will refer to the cases n - 1 and n. Replacing n with n + 1 will convert the expression back to the traditional form.

The Base Case

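This section will be quick, as it’s just algebra:

$$\frac{d}{dx} x^1 = \lim_{h \to 0} \frac{(x + h) - x}{h} = \lim_{h \to 0} \frac{h}{h} = 1 = 1 \cdot x^{1 - 1}$$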

The Induction Step

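In this part of the proof, we’ll prove that if the power rule holds for n = m - 1, then it also holds for n = m (for m ≥ 2). I’ve chosen to use m instead of n for this part since I’ve already used n for the power of x. If the power rule didn’t hold for n = m - 1, then it wouldn’t matter if the case for n = m is true, so we will assume that the power rule does hold for n = m - 1. Writing x^m = x · x^(m - 1) and using the product rule, the proof then looks like

$$\begin{aligned} \frac{d}{dx} x^m &= \frac{d}{dx}\left(x \cdot x^{m-1}\right) \\ &= \left(\frac{d}{dx} x\right) x^{m-1} + x \left(\frac{d}{dx} x^{m-1}\right) \\ &= 1 \cdot x^{m-1} + x \cdot (m - 1)\, x^{m-2} \\ &= m\, x^{m-1} \end{aligned}$$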

An Intuitive Explanation of Induction

Say you’re not convinced that this proof is valid. If so, pick any natural number. While this argument will work for any number you choose, I’m going to show you the argument for n = 3, and you should see the general pattern. First, you should agree that I’ve proven the case n = 1 by going through the algebra in the subsection The Base Case. Now, I will show you the proof in the induction step for the specific case where n = 3:

$$\begin{aligned} \frac{d}{dx} x^3 &= \frac{d}{dx}\left(x \cdot x^2\right) \\ &= \left(\frac{d}{dx} x\right) x^2 + x \left(\frac{d}{dx} x^2\right) \\ &= 1 \cdot x^2 + x \cdot 2x \\ &= 3x^2 \end{aligned}$$

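Everything about this proof should look fine except for me going from the second to the third line, where I used d/dx x² = 2x. If you’re not convinced about the n = 2 case, then we can reuse the proof in the induction step for the specific case where n = 2:

$$\begin{aligned} \frac{d}{dx} x^2 &= \frac{d}{dx}\left(x \cdot x\right) \\ &= \left(\frac{d}{dx} x\right) x + x \left(\frac{d}{dx} x\right) \\ &= 1 \cdot x + x \cdot 1 \\ &= 2x \end{aligned}$$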

I’ve only used the power rule for the case n = 1, so you should be convinced that the power rule has been verified for the case n = 3 (and n = 2).

If you happen to be a computer scientist or a programmer, you might recognize this as a recursive argument. Induction and recursion often describe the same structure but run in opposite directions: induction builds up from the base case, while recursion breaks a case down until it reaches the base case.
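To make the recursion analogy concrete, here is a small Python sketch. The function name `derivative_of_power` and the (coefficient, exponent) representation of c·x^e are my own illustration, not part of the proof. It computes d/dx xⁿ by calling itself on n - 1 until it reaches the base case n = 1, then applies the product-rule step from the induction on the way back up:

```python
from typing import Tuple


def derivative_of_power(n: int) -> Tuple[int, int]:
    """Return (coefficient, exponent) such that d/dx x**n = coefficient * x**exponent.

    Mirrors the induction proof for natural numbers n >= 1, run as a recursion:
    the calls walk down from n to the base case, and the return values build
    the answer back up from n = 1, just as the induction does.
    """
    if n < 1:
        raise ValueError("this sketch only handles natural numbers n >= 1")
    if n == 1:
        # Base case: d/dx x = lim ((x + h) - x) / h = 1 = 1 * x**0
        return 1, 0
    # Induction step: x**n = x * x**(n - 1), so by the product rule
    # d/dx x**n = (d/dx x) * x**(n - 1) + x * (d/dx x**(n - 1)).
    coeff, exp = derivative_of_power(n - 1)  # d/dx x**(n - 1) = coeff * x**exp
    # x * (coeff * x**exp) = coeff * x**(exp + 1), and exp + 1 == n - 1, so both
    # terms are multiples of x**(n - 1) and their coefficients simply add.
    return 1 + coeff, exp + 1


for n in range(1, 6):
    c, e = derivative_of_power(n)
    print(f"d/dx x^{n} = {c} x^{e}")
```

The recursion depth equals n, which mirrors how the induction argument for n = 3 needed the cases n = 2 and n = 1 underneath it.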

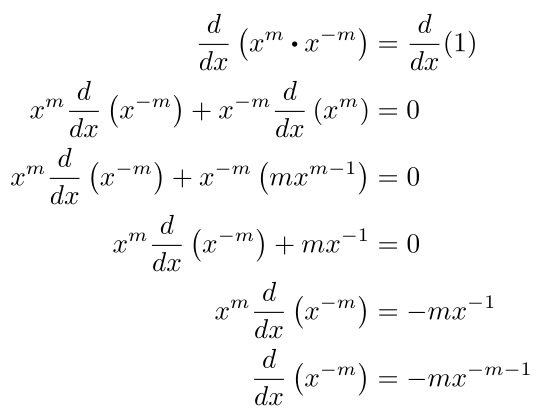

Proving the Case Where n < 0

Now, we could use the quotient rule to prove this case, but the product rule is easier to remember and use. Instead, we’re going to use the following fact, where n is a positive integer:

$$x^n \cdot x^{-n} = 1$$

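Note that the above statement is only true when x ≠ 0, since at x = 0 the left side becomes 0/0, which is undefined. x^(-n) isn’t defined at x = 0 either, so it has no derivative there and we don’t care about that point. We can take the derivatives of both sides, use the product rule, and solve for the derivative:

$$\begin{aligned} n\,x^{n-1} \cdot x^{-n} + x^n \cdot \frac{d}{dx} x^{-n} &= \frac{d}{dx} 1 = 0 \\ \frac{d}{dx} x^{-n} &= \frac{-n\,x^{n-1}\,x^{-n}}{x^n} = -n\,x^{-n-1} \end{aligned}$$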

At this point, we’ve proved the power rule for all integers.
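A similar numerical spot check for the integer cases n = -5 through 5, again using a symmetric difference quotient as a stand-in for the limit. The point x = 1.3 and the step size are arbitrary choices for this sketch:

```python
def derivative(func, x, h=1e-6):
    """Approximate func'(x) with a symmetric (central) difference quotient."""
    return (func(x + h) - func(x - h)) / (2 * h)


x = 1.3
worst = 0.0  # largest disagreement seen between the estimate and the power rule
for n in range(-5, 6):
    numeric = derivative(lambda t, n=n: t ** n, x)  # measured slope of x**n
    predicted = n * x ** (n - 1)                     # power rule
    worst = max(worst, abs(numeric - predicted))
    print(f"n = {n:2d}: numeric {numeric:.6f}, power rule {predicted:.6f}")
```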

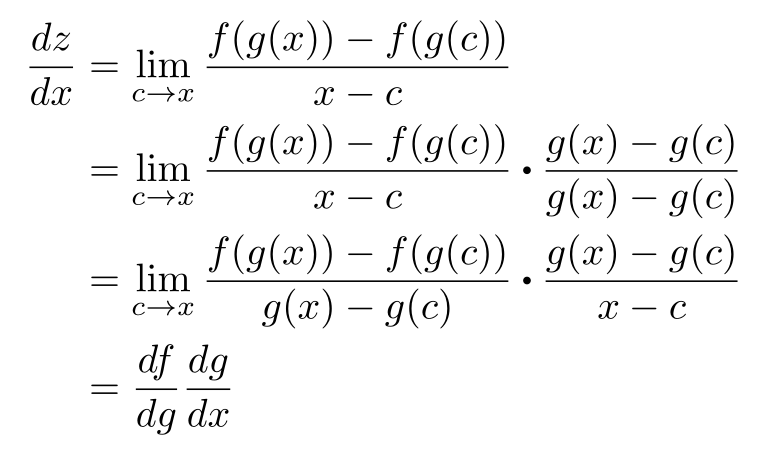

Proving the Chain Rule

The method used to prove the product rule worked, so let’s try something similar. I’ll save us some trouble and define h = c - x. Since we want the case where h → 0, we’ll want c - x → 0, which is equivalent to c → x. Furthermore, x + h = c. Plugging everything into the definition of the derivative of f(g(x)) gets us

$$\frac{d}{dx} f(g(x)) = \lim_{c \to x} \frac{f(g(c)) - f(g(x))}{c - x}$$

You might realize that as c approaches x, g(c) approaches g(x), since a differentiable function is continuous. If you’ve taken a few example derivatives of functions of functions, you might notice a pattern. (Try taking the derivatives of (x + a)³ or (x² + a)², where a is a constant, and then factor out (x + a) or (x² + a).) You might get the idea that you’ll want to take the derivative of the outer function with respect to the inner function, which would look like

$$\lim_{c \to x} \frac{f(g(c)) - f(g(x))}{g(c) - g(x)}$$

If you define a new h = g(c) - g(x) and note that as c approaches x, h approaches 0, you can rewrite the expression above as follows:

$$\lim_{c \to x} \frac{f(g(c)) - f(g(x))}{g(c) - g(x)} = \lim_{h \to 0} \frac{f(g(x) + h) - f(g(x))}{h} = f'(g(x))$$

Since x stays fixed while c moves, g(x) is just a number, so this expression is the derivative of f evaluated at g(x). We know how to evaluate this expression, so if we go back to the original derivative, we’ll want to get g(c) - g(x) on the bottom. We can use another classic technique in this case: multiplying by one. Just as adding zero didn’t change anything, multiplying by one shouldn’t change anything either. We can choose many expressions that equal one, but (g(c) - g(x))/(g(c) - g(x)) will get us the right answer.

Finally, we end up with the chain rule:

$$\frac{d}{dx} f(g(x)) = \lim_{c \to x} \frac{f(g(c)) - f(g(x))}{g(c) - g(x)} \cdot \frac{g(c) - g(x)}{c - x} = f'(g(x))\,g'(x)$$
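The chain rule can be spot-checked numerically the same way. In this sketch, `outer` and `inner` are hypothetical example functions playing the roles of f and g, and the check compares the measured slope of f(g(x)) with f'(g(x)) · g'(x), each estimated by a symmetric difference quotient:

```python
import math


def derivative(func, x, h=1e-6):
    """Approximate func'(x) with a symmetric (central) difference quotient."""
    return (func(x + h) - func(x - h)) / (2 * h)


def outer(u):  # plays the role of f
    return u ** 3 + math.cos(u)


def inner(x):  # plays the role of g
    return x ** 2 + 1


x = 0.8
composite = derivative(lambda t: outer(inner(t)), x)         # (f o g)'(x), measured
chain = derivative(outer, inner(x)) * derivative(inner, x)  # f'(g(x)) * g'(x)
print(f"measured {composite:.8f}, chain rule {chain:.8f}")
```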

Problems with this Proof

Abstractions allow you to work on any system that fits your abstraction as long as you follow its rules. Remember that if there are points c arbitrarily close to x with g(c) = g(x), then we didn’t actually multiply by one: we multiplied by 0/0, which is undefined. For what we’re doing, this proof of the chain rule is still valid, since the inner functions we use (like x^(1/q)) satisfy g(c) = g(x) only when c = x. You would see a problem if you tried to differentiate f(g(x)) at x = 0 with an inner function like g(x) = x² sin(1/x) (with g(0) = 0), since every interval around x = 0 contains points c ≠ 0 where g(c) = g(0). To get around this restriction, the standard proof replaces the difference quotient of f with a helper function that is defined to equal f'(g(x)) whenever g(c) = g(x). It doesn’t matter for us in this proof, so I’ll move on.

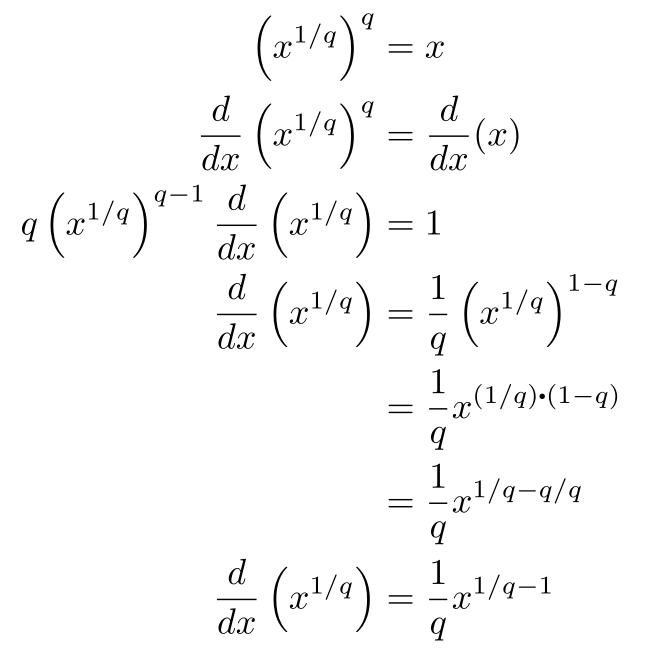

Proving the Case Where n is Rational

We’re going to use a trick similar to the one in the case where n < 0. Specifically, if we take the qth root of a number and raise it to the qth power, we get back the number we started with. In mathematics, that statement looks like

$$\left(x^{1/q}\right)^q = x$$

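No one would try to take the derivative of a function where it doesn’t exist, so we will only care about the derivative where the function exists (and x ≠ 0). We’ll follow the same process as in the case where n is a negative integer: take the derivatives of both sides, use the chain rule (with outer function u^q and inner function x^(1/q)), and solve for the derivative:

$$\begin{aligned} q\left(x^{1/q}\right)^{q-1} \cdot \frac{d}{dx} x^{1/q} &= 1 \\ \frac{d}{dx} x^{1/q} &= \frac{1}{q}\,x^{-(q-1)/q} = \frac{1}{q}\,x^{1/q - 1} \end{aligned}$$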

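To get all rational numbers, we then consider what happens when n = p/q, where p and q are integers with q > 0. Applying the chain rule again, this time with outer function u^p and inner function x^(1/q):

$$\frac{d}{dx} x^{p/q} = \frac{d}{dx}\left(x^{1/q}\right)^p = p\left(x^{1/q}\right)^{p-1} \cdot \frac{1}{q}\,x^{1/q - 1} = \frac{p}{q}\,x^{(p-1)/q + 1/q - 1} = \frac{p}{q}\,x^{p/q - 1}$$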

and we’re done with this section of the proof.
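The exponent bookkeeping in this step is easy to get wrong, so here is a sketch that tracks it exactly with Python’s `fractions.Fraction`. The helper name `rational_power_derivative` is made up for this example. It multiplies the outer and inner coefficients and adds the exponents, just as the chain-rule step above does, and then cross-checks p/q = 2/3 numerically:

```python
from fractions import Fraction


def rational_power_derivative(p: int, q: int):
    """Return (coefficient, exponent) with d/dx x**(p/q) = coefficient * x**exponent,
    following the chain-rule argument x**(p/q) = (x**(1/q))**p for q > 0.
    Everything is kept as exact Fractions."""
    if q <= 0:
        raise ValueError("q must be a positive integer")
    # Outer function u**p: derivative p * u**(p - 1); with u = x**(1/q),
    # u**(p - 1) = x**((p - 1)/q).
    outer_coeff, outer_exp = Fraction(p), Fraction(p - 1, q)
    # Inner function x**(1/q): derivative (1/q) * x**(1/q - 1), from the step above.
    inner_coeff, inner_exp = Fraction(1, q), Fraction(1, q) - 1
    # Chain rule: multiply the two factors (multiply coefficients, add exponents).
    return outer_coeff * inner_coeff, outer_exp + inner_exp


coeff, expo = rational_power_derivative(2, 3)
print(coeff, expo)  # prints: 2/3 -1/3

# Numerical cross-check at x = 2 with a symmetric difference quotient.
x, h = 2.0, 1e-6
numeric = ((x + h) ** (2 / 3) - (x - h) ** (2 / 3)) / (2 * h)
predicted = float(coeff) * x ** float(expo)
print(numeric, predicted)
```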

Proving the Case Where n is Irrational

To quote the Wikipedia article for the power rule in the section Proof for Real Exponents:

Although it is feasible to define the value [f(x) = x^r] as the limit of a sequence of rational powers that approach the irrational power whenever we encounter such a power, or as the least upper bound of a set of rational powers less than the given power, this type of definition is not amenable to differentiation.

We’re going to define the value as the limit of a sequence of rational powers that approach an irrational power. This part of the proof may have some errors as I have never seen anyone prove it in this manner, so let me know in the comments if I made a mistake.

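Every irrational number can be represented as the limit of a sequence of rational numbers. So now, let’s set up some definitions (taking r > 0 and x > 0):

$$R_k = \frac{\lfloor 10^k r \rfloor}{10^k}, \qquad x^r = \lim_{k \to \infty} x^{R_k}, \qquad f_k(x) = \frac{(x + h)^{R_k} - x^{R_k}}{h}$$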

If r = π, then R4 = 3.1415. If r = sqrt(200), then R3 = 14.142. In other words, Rk gets you k digits after the decimal point. It’s easy to see that the limit of this sequence of rational numbers is r, since the difference between Rk and r is less than 10^(-k) and therefore goes to zero. So now, we have two limits we want to take, k → ∞ and h → 0. If we take the h limit first (using the rational case), we end up with

$$\lim_{k \to \infty} \lim_{h \to 0} f_k(x) = \lim_{k \to \infty} R_k\,x^{R_k - 1} = r\,x^{r - 1}$$

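If we take the k limit first, we end up with

$$\lim_{h \to 0} \lim_{k \to \infty} f_k(x) = \lim_{h \to 0} \frac{(x + h)^r - x^r}{h} = \frac{d}{dx} x^r$$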
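Here is a sketch of the truncation Rk in Python, assuming r > 0 and a small k (at most about 10) so that floating-point rounding doesn’t interfere; the helper name `truncate` is my own. It reproduces the two examples above and then shows Rk x^(Rk - 1), the result of taking the h limit first, approaching r x^(r - 1) for r = sqrt(2) at x = 2:

```python
import math
from fractions import Fraction


def truncate(r: float, k: int) -> Fraction:
    """Rk: r truncated to k digits after the decimal point, as an exact fraction.
    Assumes r > 0 and small k, so the float product r * 10**k is accurate enough."""
    scale = 10 ** k
    return Fraction(math.floor(r * scale), scale)


print(truncate(math.pi, 4))                # 3.1415 as a fraction: 6283/2000
print(float(truncate(math.sqrt(200), 3)))  # 14.142

# Taking the h limit first leaves Rk * x**(Rk - 1); watch it approach r * x**(r - 1).
r, x = math.sqrt(2), 2.0
target = r * x ** (r - 1)
errors = []
for k in range(1, 9):
    rk = float(truncate(r, k))
    errors.append(abs(rk * x ** (rk - 1) - target))
    print(f"k = {k}: Rk = {rk}, error = {errors[-1]:.2e}")
```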

You might think that the order of limits doesn’t matter (it doesn’t in this case), but that isn’t guaranteed in general. We can guarantee that taking the limits in either order gets us the same result if both limits converge pointwise and at least one of them converges uniformly. This fact is known as the Moore-Osgood Theorem.
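For a standard example of two iterated limits that disagree (not part of this proof), consider m/(m + n) for natural numbers m and n. Holding one variable fixed while the other goes to infinity gives different answers depending on which goes first:

$$\lim_{m \to \infty} \lim_{n \to \infty} \frac{m}{m + n} = \lim_{m \to \infty} 0 = 0 \qquad \text{but} \qquad \lim_{n \to \infty} \lim_{m \to \infty} \frac{m}{m + n} = \lim_{n \to \infty} 1 = 1$$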

Pointwise Convergence

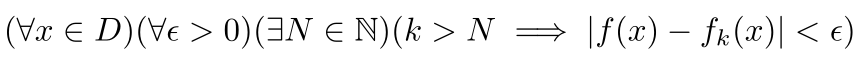

Pointwise convergence means

No matter what x you pick in the domain, the sequence of functions will converge to the value of the function at x.

We’ve proven that the h limit converges pointwise: for each k, fk is a difference quotient of x raised to the rational power Rk, and we’ve already shown that this quotient converges to Rk x^(Rk - 1) for every x ≠ 0. The other limit also converges pointwise since the difference between x^r and x^Rk goes to zero as k increases.

Uniform Convergence

Uniform convergence is a much stronger statement than pointwise convergence.

For an arbitrary ϵ > 0, we have to pick a single natural number N, the same for every x in the domain, such that for any k after N and any x in the domain, the difference between fk(x) and f(x) is less than ϵ.

For example, let’s say our domain is (4, 5), our r = sqrt(2), and ϵ = 0.0001. For k > 4, the difference between x^Rk and x^r is less than 0.0001 everywhere in the domain, so our N = 4. I don’t want to go through the entire proof of uniform convergence, but I can give the general overview:

- Focus only on x^r, since the sum or difference of two functions that converge uniformly on the same domain also converges uniformly on that domain.

- First, pick the x where you want to take the derivative. We’ll call this c.

- Pick a small h such that 0 is not in (c - 2h, c + 2h). At 0, the limit may not exist for irrational powers, so we don’t care.

- Let your domain be (c - 2h, c + 2h).

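- Because irrational powers are only defined for positive x, c > 0, so the whole domain lies to the right of 0. Write the difference as x^r - x^Rk = x^Rk (x^(r - Rk) - 1). On this bounded domain, x^Rk is bounded by a constant that doesn’t depend on k.

- Since x^(r - Rk) is monotonic in x, |x^(r - Rk) - 1| is at most the larger of its values at the endpoints c - 2h and c + 2h. So the least upper bound of the difference over the whole domain is at most that constant times the larger endpoint value.

- Both endpoint values go to zero as k increases because r - Rk goes to zero, so the least upper bound also decreases to zero.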

- Since the least upper bound goes to zero, it must at some point be less than whatever epsilon you chose.

- Therefore, we’ve proven that x^Rk converges uniformly to x^r in the relevant region.

- Since fk is the difference between x^Rk at two points in the domain divided by a nonzero constant, the k limit also converges uniformly.
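The example above (domain (4, 5), r = sqrt(2), ϵ = 0.0001) can be checked numerically. This sketch estimates the least upper bound of |x^r - x^Rk| on an evenly spaced grid over [4, 5]; the helper names and the grid size are arbitrary choices, and a grid only approximates the true least upper bound. For x ≥ 1 the difference increases with x, so the largest value sits at x = 5 anyway:

```python
import math


def truncate(r: float, k: int) -> float:
    """Rk: r truncated to k digits after the decimal point (assumes r > 0, k <= 10)."""
    scale = 10 ** k
    return math.floor(r * scale) / scale


def sup_gap(r: float, k: int, lo: float, hi: float, samples: int = 10_001) -> float:
    """Largest |x**r - x**Rk| over evenly spaced points of [lo, hi]."""
    rk = truncate(r, k)
    step = (hi - lo) / (samples - 1)
    return max(abs((lo + i * step) ** r - (lo + i * step) ** rk) for i in range(samples))


r, eps = math.sqrt(2), 1e-4
gaps = {k: sup_gap(r, k, 4.0, 5.0) for k in range(1, 11)}
for k, gap in gaps.items():
    print(f"k = {k:2d}: largest gap {gap:.3e}, below epsilon: {gap < eps}")
```

The gap for k = 4 is still above 0.0001, while every k from 5 on is below it, which matches N = 4 in the example.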

All Irrational Numbers

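Now, by the Moore-Osgood Theorem, the two orders of taking the limits agree, so

$$\frac{d}{dx} x^r = r\,x^{r - 1}$$

for every irrational r (and every x > 0), and we’re done.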

Q.E.D.

What About the Moore-Osgood Theorem?

On one hand, citing a theorem without proof goes against the “from scratch” part of the title. On the other hand, this article is intended for high school to early college-level calculus students and real analysis can get quite intense. As a compromise, I’m leaving this article as is, but I will link to the article I wrote proving the Moore-Osgood Theorem and the two articles I wrote explaining the background needed to read the proof.

- What is a Limit, Really?, which establishes what a limit is formally and how you can use nets to generalize limits.

- Uniform and Pointwise Convergence, which explains the difference between the two and how to prove them.

- When Can You Switch Limits in Calculus?, which shows my proof of the Moore-Osgood Theorem.

By separating things out into multiple articles, students aren’t overwhelmed with an hour-long article that goes into the depths of real analysis and I can maintain the “from scratch” part of the title.

Conclusion

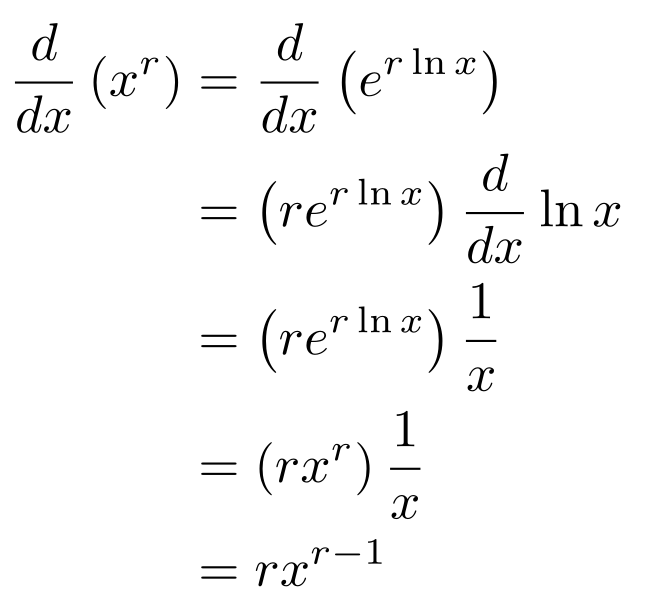

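If we had allowed ourselves to use the derivatives of e^x and ln x, we could have used the proof (for x > 0)

$$\frac{d}{dx} x^n = \frac{d}{dx} e^{n \ln x} = e^{n \ln x} \cdot \frac{n}{x} = x^n \cdot \frac{n}{x} = n\,x^{n-1}$$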

If we wanted, we could have calculated these derivatives from the definitions of e^x and ln x together with the chain rule. Either way, we would end up proving the power rule from scratch. I prefer the proof presented in this article for several reasons:

- Students don’t learn about derivatives of exponential or logarithmic functions until the latter half of the class.

- We also prove the product rule and the chain rule and use them in the main proof.

- This proof provides an example of proof by induction.

- It also introduces the proof techniques of adding zero and multiplying by one.

- We also build up the real numbers from the rational numbers, which we build up from the integers, which helps us see how these number systems relate to each other.

- If you wanted to prove the power rule, I doubt your first thought would be to rewrite it in terms of functions whose derivatives you don’t know yet. Instead, you might try a proof like the one in this article. Students tend to see math as a list of random facts to memorize with little to no connection between them, and the exponential proof can reinforce that mindset.

If you have anything else you would like me to prove from scratch, leave a suggestion. I will neither prove unsolved problems (e.g. the Navier-Stokes existence and smoothness problem) nor theorems that require hundreds of pages to prove (e.g. Fermat’s Last Theorem).