How Geometry and Combinatorics Tame the Networks in your Brain

Physical Constraints Regulate Information Dynamics

Everything the human brain is capable of is the product of a complex symphony of interactions between many distinct signaling events and a myriad of individual computations. The brain’s abilities to learn, connect abstract concepts, adapt, and imagine are all the result of this vast combinatorial computational space. Even elusive properties such as self-awareness and consciousness presumably owe themselves to it. This computational space is the product of millions of years of evolution, embodied in the physical and chemical substrate that makes up the brain: its ‘wetware’.

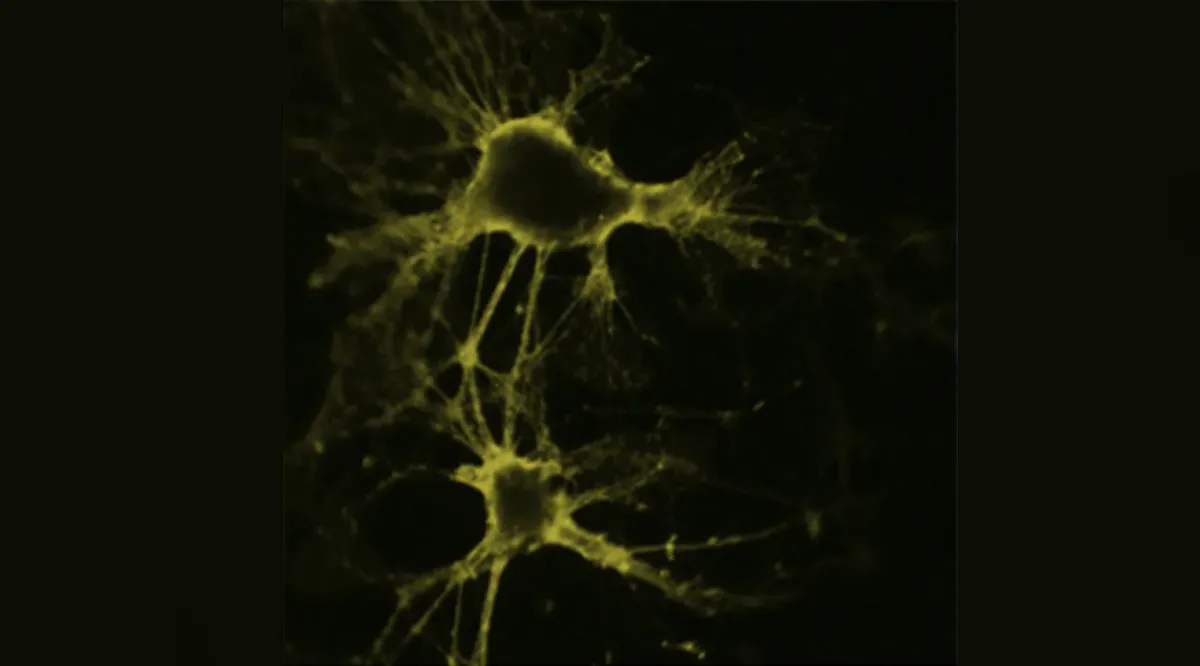

Physically, the brain is a geometric spatial network of about 86 billion neurons and 85 billion non-neuronal cells. There is tremendous variability within these numbers. Many classes and subclasses of both neurons and non-neuronal cells exhibit different physiological dynamics and morphological (structural) heterogeneity, and all of this contributes to the brain’s dynamics. A seemingly uncountable number of signaling events occur at different spatial and temporal scales, interacting and integrating with each other. Together they are responsible for the brain’s computational complexity and the functions that emerge from it.

Despite a size that is intuitively difficult to grasp, this computational space is finite. It is limited by the physical constraints imposed on the brain by the substrate that makes up its wetware. Taking these constraints into consideration can guide the analysis and interpretation of experimental data. It can also guide the construction of mathematical models that aim to make sense of how the brain works and how cognitive functions emerge. The most basic of these constraints is a fundamental structure-function constraint imposed by the interplay between the spatial geometry and the connectivity of brain networks. This constraint regulates the dynamic signaling events that neurons use to communicate with each other.

In fact, this fundamental structure-function constraint is so important that my colleagues Alysson Muotri at the University of California San Diego, Christopher White at Microsoft Research, and I recently proposed a requirement for any theoretical or computational model that claims relevance to neuroscience and to how the brain works. Such a model must be able to account for this constraint, even if it was not originally built with the constraint in mind.

Another fundamental constraint is energy. The brain has evolved algorithms and tricks to make the most of the energy it has access to, because it has to. It consumes a large share of the body’s total metabolic energy (roughly 20 percent at rest). In absolute terms, however, this is nothing compared to, say, a high-performance computing system. The brain uses about as much power as a dim lightbulb, on the order of 20 watts. Yet the absolute limits matter less than what they reveal. We have the most to learn from the brain’s ability to optimize its performance in the face of these limits. Energy constraints, and the strategies the brain has evolved to make the best use of the resources available to it, are further considerations we should take into account in order to understand the brain’s engineering design and algorithms.
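The lightbulb comparison can be put into numbers with a quick calculation. The 20-watt brain figure is a commonly cited estimate. The 20-megawatt figure for a high-performance computing system is an assumed order of magnitude chosen for illustration, since large supercomputers draw tens of megawatts; it is not a number from the article.

```python
# Back-of-the-envelope energy arithmetic (illustrative figures, not from the article).
BRAIN_WATTS = 20.0           # commonly quoted estimate for the human brain
SUPERCOMPUTER_WATTS = 20e6   # assumed order of magnitude for a large HPC system
JOULES_PER_KCAL = 4184.0
SECONDS_PER_DAY = 24 * 60 * 60


def daily_energy_kcal(watts):
    """Energy used over one day at a constant power draw, in kilocalories."""
    return watts * SECONDS_PER_DAY / JOULES_PER_KCAL


brain_kcal = daily_energy_kcal(BRAIN_WATTS)
ratio = SUPERCOMPUTER_WATTS / BRAIN_WATTS
print(f"brain: ~{brain_kcal:.0f} kcal/day; assumed supercomputer draws {ratio:.0e}x more power")
```

At a steady 20 watts, the brain uses roughly 400 kilocalories a day. That is about a million times less power than the assumed supercomputer.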

If we can understand how these constraints determine and regulate dynamic signaling and information flow at different scales of brain networks, we will begin to understand the brain from an engineering systems perspective in ways we simply do not yet. Those scales range from individual neurons as mini-networks, to networks of neurons, to networks of brain regions. This is easy to say but much harder to do, especially when it comes to filling in the details. In truth, engineers, physicists, neuroscientists, and mathematicians are collectively just beginning to scratch the surface, each bringing to bear a different perspective and complementary toolsets. This approach currently drives much of our lab’s own research.

A fundamental constraint imposed by graph geometry, connectomics, and signaling dynamics

The fundamental structure-function constraint results from the interplay between anatomical structure and signaling dynamics. The integration and transmission of information in the brain depend on interactions between structural organization and signaling dynamics across a number of scales. At each scale, the constraint is imposed by the geometry of the structural networks that make up the brain, meaning their connectivity and path lengths. It is also imposed by the resulting latencies in the flow and transfer of information within and between functional scales. In short, it is a constraint produced by the way the brain is wired and by how its constituent parts necessarily interact, for example neurons at one scale and brain regions at another.

Consider, for example, the cellular network scale. Individual neurons are the nodes of the network, connected by geometrically convoluted axons of varying lengths. Discrete signaling events called action potentials travel between neurons. Action potentials arriving at a receiving neuron add together until a threshold is reached. That neuron then fires and sends its own action potentials to the downstream neurons it is connected to. Action potentials are not instantaneous, though. They travel down axons at finite speeds, their conduction velocities, which are dictated by the biophysics of the cell membrane that makes up the axon. The physical paths (the geometry) of axons are also not straight lines; they twist and turn. The result is latency in the signals that action potentials carry to downstream neurons, and this latency in turn affects when each signal contributes to the summation toward threshold.
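The sketch below illustrates the two effects just described. It rests on simplifying assumptions of our own. Each axon is traced as a polyline, so its length can exceed the straight-line distance between its endpoints. Every input spike leaves its soma at time zero. Inputs simply add, with no leak or decay, and the neuron fires the moment the running sum reaches a threshold. The function names and units are illustrative.

```python
import math


def path_length(points):
    """Length of an axon traced as a polyline of (x, y) points, in mm."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def firing_time(inputs, velocity, threshold):
    """Time (ms) at which summed inputs first reach threshold, or None.

    inputs:   list of (axon_points, weight); each presynaptic spike is
              assumed to leave its soma at t = 0
    velocity: conduction velocity in m/s (numerically equal to mm per ms)
    """
    # Latency of each input = axonal path length / conduction velocity.
    arrivals = sorted((path_length(pts) / velocity, w) for pts, w in inputs)
    total = 0.0
    for t, w in arrivals:
        total += w  # simple summation, no leak (a simplifying assumption)
        if total >= threshold:
            return t
    return None


straight = [(0.0, 0.0), (3.0, 4.0)]             # 5 mm, straight line
detour = [(0.0, 0.0), (0.0, 4.0), (3.0, 4.0)]   # 7 mm, same endpoints
print(firing_time([(straight, 1.0), (detour, 1.0)], velocity=1.0, threshold=2.0))  # 7.0
```

Both axons start and end at the same points, 5 mm apart. The convoluted one is 7 mm long, so at 1 m/s the neuron reaches a threshold of two inputs at 7 ms rather than 5 ms. The path, not the distance, sets when it fires.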

From a combinatorial perspective, consider also that the timing and summation of action potentials at any one neuron occur independently of all other neurons and action potentials. There are on the order of 10 quadrillion connections between neurons in an adult human brain. Every one of them is essentially doing its own thing, unaware of and indifferent to what the rest are doing. As if this were not complicated enough, a neuron receiving signals can be in a refractory state during which it cannot respond to incoming action potentials. This amplifies the effect that the timing of arriving signals (driven by network geometry and signaling latencies) has on the brain’s overall computations. The subtle interplay between these competing effects is expressed mathematically in a quantity called the refraction ratio, and it can have a profound effect on the dynamics of the brain. In fact, we have shown that individual neurons seem to optimize the design of their shapes to preserve this refraction ratio.
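The formula for the refraction ratio is not given at this point in the article. For illustration, the sketch below assumes a working definition: the ratio of a neuron’s refractory period to the latency of an incoming signal, where latency is axonal path length divided by conduction velocity. This is not necessarily the exact definition used in the published work mentioned above. Under this definition, values well above 1 mean signals tend to arrive while the target is still refractory. Values well below 1 mean the target has long since recovered. The 20 ms refractory period and 100-pixel path are hypothetical.

```python
def refraction_ratio(refractory_ms, path_length, speed):
    """Hypothetical working definition: refractory period / signal latency.

    path_length and speed must use matching units (e.g. pixels and pixels
    per ms) so that path_length / speed is a latency in ms.
    """
    latency_ms = path_length / speed
    return refractory_ms / latency_ms


# Same geometry, three speeds: only the ratio changes, the path does not.
for speed in (2, 20, 200):
    r = refraction_ratio(refractory_ms=20.0, path_length=100.0, speed=speed)
    print(f"speed {speed:>3} px/ms -> refraction ratio {r:g}")
```

With the geometry held fixed, each tenfold increase in signaling speed raises the ratio tenfold.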

From a more general information-theoretic perspective, these principles apply not just to biological neural networks but to any physically constructible network. The networks that make up the brain are physical constructions over which information must travel. The signals that encode this information are subject to processing times and finite signaling speeds (conduction velocities, in the case of the brain). They must also travel finite distances to exert their effects, that is, to transfer the information they carry to the next stage in the system. Nothing is infinitely fast, and the same concepts apply to any physical network, whether it is the brain or not.

Importantly, in the brain the latencies created by structural geometry and signaling speed occur on a temporal scale similar to that of the network’s functional processes. When that happens, the interplay between structure and signaling dynamics has a major effect on what the network can and cannot do. You cannot ignore it. When we flip a light switch, the effect seems instantaneous and we can ignore the timescale over which electricity travels. In the brain, by contrast, the signaling latencies imposed by biophysics and network geometry have a profound effect on its computations. They are central to understanding how structure determines function in the brain, and how function modulates structure, for example in learning and plasticity.
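The light-switch contrast can be made concrete with rough numbers. The distances, the speeds, and the 2 ms refractory period below are illustrative assumptions drawn from commonly quoted ranges. Electrical signals in copper wiring travel at a large fraction of the speed of light, while axonal conduction velocities range from well under 1 m/s to over 100 m/s.

```python
def latency_ms(distance_m, speed_m_per_s):
    """Travel time in milliseconds for a signal covering distance_m at speed_m_per_s."""
    return distance_m / speed_m_per_s * 1000.0


REFRACTORY_MS = 2.0  # assumed refractory period of a few milliseconds

# Illustrative cases (assumptions, not measurements from the article).
cases = {
    "10 m of house wiring (~2e8 m/s)": latency_ms(10.0, 2.0e8),
    "1 cm unmyelinated axon (0.5 m/s)": latency_ms(0.01, 0.5),
    "10 cm myelinated axon (10 m/s)": latency_ms(0.10, 10.0),
}
for name, t in cases.items():
    print(f"{name}: {t:.2g} ms ({t / REFRACTORY_MS:.2g}x the refractory period)")
```

The wire’s delay is about 40,000 times shorter than the assumed refractory period, so it can safely be ignored. The axonal delays are comparable to or longer than the refractory period, which is the regime where latency shapes what the network computes.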

The effect geometry can have on network dynamics: A biological neural network modeled as a graph

The dramatic effect the structure-function constraint can have on network dynamics is easy to illustrate with a numerical simulation of a geometric biological neural network. The refraction ratio captures the balance between network geometry and network dynamics. If that balance is mismatched, the network’s dynamics and its ability to process information can break down completely, even though the network itself remains unchanged.

In numerical experiments, we stimulated a geometric network of 100 neurons for 500 ms and then observed the effects of changing the refraction ratio by changing the signaling speed. The results are summarized in the figure below. Leaving the connectivity and geometry (edge path lengths) unchanged, we tested three signaling speeds (action potential conduction velocities): 2, 20, and 200 pixels per ms. We then observed how well the network sustained inherent recurrent signaling without further external stimulation. At the lowest signaling speed, 2 pixels per ms, the network kept going after we stopped driving it externally. It produced recurrent low-frequency periodic activity that qualitatively resembled patterns seen in certain parts of the brain and spinal cord. At 20 pixels per ms there was still some recurrent activity, but it was more sporadic and irregular, not the kind of pattern you would see in a typical brain. At 200 pixels per ms, the network’s activity died out entirely, with no signaling past the half-second stimulus period. This is a direct consequence of a mismatch in the refraction ratio. When signals arrive too quickly at downstream neurons, those neurons have not had enough time to recover from their refractory periods. The incoming signals therefore cannot trigger downstream activations, and the activity of the entire network dies.
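The experiment above can be mimicked, very loosely, with a small event-driven toy model. This is not the model or code behind the figure. Every modeling choice here is a hypothetical assumption: neurons placed at random in a 500-by-500 pixel square, random directed connections, straight-line edge lengths, a single 20 ms refractory period, and a neuron that fires whenever an input arrives while it is not refractory (a threshold of one input). What the sketch does share with the text is the mechanism. Geometry and connectivity stay fixed, only the conduction velocity changes, and inputs arriving during a refractory period are lost.

```python
import heapq
import math
import random


def simulate(positions, edges, speed, refractory, stimuli, t_end):
    """Event-driven toy network. Returns a time-ordered list of (time, neuron) spikes.

    positions:  list of (x, y) coordinates in pixels, one per neuron
    edges:      dict mapping a neuron to the list of neurons it projects to
    speed:      conduction velocity in pixels per ms (same for every edge)
    refractory: refractory period in ms
    stimuli:    list of (time, neuron) external inputs
    t_end:      events at or after this time (ms) are not processed
    """
    events = list(stimuli)
    heapq.heapify(events)
    last_fire = [None] * len(positions)
    spikes = []
    while events:
        t, j = heapq.heappop(events)
        if t >= t_end:
            break
        # Inputs that arrive while the neuron is refractory are lost.
        if last_fire[j] is not None and t - last_fire[j] < refractory:
            continue
        last_fire[j] = t
        spikes.append((t, j))
        for k in edges.get(j, []):
            # Latency = edge length / conduction velocity; straight-line
            # distance stands in for a real, convoluted axon path.
            latency = math.dist(positions[j], positions[k]) / speed
            heapq.heappush(events, (t + latency, k))
    return spikes


def random_network(n=100, size=500.0, p=0.05, seed=0):
    """Hypothetical geometric network: random positions, random directed edges."""
    rng = random.Random(seed)
    positions = [(rng.uniform(0, size), rng.uniform(0, size)) for _ in range(n)]
    edges = {j: [k for k in range(n) if k != j and rng.random() < p] for j in range(n)}
    return positions, edges


if __name__ == "__main__":
    positions, edges = random_network()
    rng = random.Random(1)
    # Drive random neurons during the first 500 ms, then stop.
    stimuli = [(rng.uniform(0, 500), rng.randrange(len(positions))) for _ in range(200)]
    for speed in (2, 20, 200):
        spikes = simulate(positions, edges, speed, refractory=20.0,
                          stimuli=stimuli, t_end=1500.0)
        after = sum(1 for t, _ in spikes if t > 500)
        print(f"{speed:>3} px/ms: {after} spikes after the stimulus ended")
```

The script prints, for each speed, how many spikes occur after stimulation stops at 500 ms. How closely this tracks the three regimes in the figure depends on the parameters, which are illustrative rather than fitted. In the smallest possible case, two neurons 10 pixels apart feeding each other, a speed of 2 pixels per ms gives a 5 ms round trip leg that outlasts a 3 ms refractory period, so activity ping-pongs indefinitely. At 20 pixels per ms the returning spike arrives after 1 ms, while the first neuron is still refractory, and activity stops after two spikes.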

A systems engineering understanding of the brain: Constraint-based modeling of experimental systems

Ultimately, the impact constraint-based modeling will have on our understanding of the brain as an engineered system will be realized when we apply it to experimental data and measurements about the brain.

In particular, individualized human-derived brain organoids are a potentially unique experimental model of the brain because of their putative relevance to human brain structure and function. They are likely a prime experimental model for guiding the development and testing of constraint-based mathematical models. One of the unique features of organoids is their ability to bridge mechanistic neurobiology with higher-level cognitive properties and clinical considerations in the intact human, potentially including consciousness.

And while their direct relevance to the human brain will vary, other experimental models offer the opportunity to ask specific questions that take advantage of each model’s unique properties, which organoids might not share. There are likely aspects of neurobiology that are invariant across taxa. Considering them alongside what also happens in an organoid could establish an abstraction about the brain’s wetware. Examples include the deterministic and reproducible neural connectivity of the worm C. elegans across individuals, or the opportunity to observe and measure the physiology and metabolic properties of intact zebrafish during behavioral experiments. The mathematics used to model different experimental systems is agnostic to the neurobiological details, and fundamental constraints such as the structure-function constraint or energy considerations are universal. Rigorous constraint-based mathematical models that integrate data across experimental models offer the opportunity to generate insights about how the brain works that no single approach can produce by itself.