What is Fractional Calculus?

“A paradox from which one day useful consequences will be drawn.” ~Gottfried Leibniz

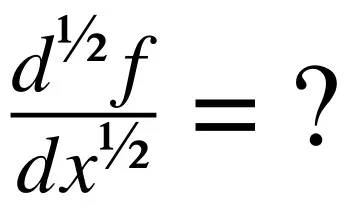

Differential calculus was invented independently by Isaac Newton and Gottfried Leibniz, and it was understood early on that the nth-order derivative, that is, the result of applying the differentiation operation n times in succession, was meaningful. In a 1695 letter, l’Hôpital asked Leibniz about the possibility that n could be something other than an integer, such as n = 1/2. Leibniz responded that “It will lead to a paradox, from which one day useful consequences will be drawn.” Leibniz was right, but it would be centuries before it became clear just how right he was.

This article investigates what it could possibly mean to take something like a derivative of order 1/2, and in doing so introduces the theory of fractional calculus.

Intuition

There are two ways to interpret the expression

$$\frac{d^n f}{dt^n}$$

The first is the one we all learn in basic calculus: it is the function we get by differentiating f n times in succession. The second is more subtle: we read it as an operator, d^n/dt^n, whose action on the function f(t) is determined by the parameter n. What l’Hôpital was asking is how this operator behaves when n is not an integer.

The most natural way to approach this question is to think of differentiation and integration as transformations that take f and turn it into a new function. We are then looking for a family of operators, indexed by a continuous parameter, that interpolates between f, its derivatives, and its antiderivatives.

The fractional integral and derivative

The most natural place to start our search for fractional-order differential and integral operators is Cauchy’s formula for repeated integration. If we integrate a function n times in succession, each time starting from the lower limit 0, the result collapses into a single integral:

$$I^n f(t) = \frac{1}{(n-1)!}\int_0^t (t-\tau)^{n-1} f(\tau)\,d\tau$$

The gamma function generalizes the factorial. Since Γ(n) = (n-1)! for positive integers n, the obvious way to generalize Cauchy’s formula to a real order α (strictly greater than zero) is

$$I^\alpha f(t) = \frac{1}{\Gamma(\alpha)}\int_0^t (t-\tau)^{\alpha-1} f(\tau)\,d\tau$$

And indeed, this is a valid operator for integration to fractional order. It is called the left Riemann-Liouville (R-L) integral; we will discuss the purpose of the “left” qualifier later. Many different fractional integration operators are accepted in the literature, but the R-L integral is the simplest to use and understand. α may also be complex with real part strictly greater than zero, but for simplicity we will assume that α is real. The special case α = 1/2 is called the semi-integral.
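Because the R-L integral is a single integral with a weakly singular kernel, it is straightforward to approximate on a computer. Below is a minimal Python sketch, not a method taken from this article: `rl_integral` is a hypothetical helper that uses a simple product-rectangle rule (f frozen at the midpoint of each subinterval, kernel integrated exactly), assuming a lower limit of 0 and real α > 0. With α = 2 it reproduces Cauchy's formula for a double integral.

```python
import math
from typing import Callable


def rl_integral(f: Callable[[float], float], t: float, alpha: float, n: int = 2000) -> float:
    """Approximate the left Riemann-Liouville integral I^alpha f(t), lower limit 0.

    Product-rectangle rule: f is frozen at its midpoint value on each of n equal
    subintervals of [0, t], while the weakly singular kernel (t - tau)^(alpha - 1)
    is integrated exactly on each subinterval.
    """
    if alpha <= 0:
        raise ValueError("alpha must be strictly positive")
    if t <= 0:
        return 0.0
    total = 0.0
    for j in range(n):
        # Distances t - tau at the two ends of subinterval j; the last u_lo is
        # exactly 0, so no negative number is ever raised to a fractional power.
        u_hi = t * (n - j) / n
        u_lo = t * (n - j - 1) / n
        weight = (u_hi ** alpha - u_lo ** alpha) / alpha  # exact kernel integral
        total += weight * f(t - (u_hi + u_lo) / 2)        # f at the subinterval midpoint
    return total / math.gamma(alpha)


# Semi-integral of f(t) = 1 at t = 4, next to the exact value 4**0.5 / Gamma(1.5).
print(rl_integral(lambda tau: 1.0, 4.0, 0.5))
print(4.0 ** 0.5 / math.gamma(1.5))

# alpha = 2 is Cauchy's formula with n = 2: integrating t twice gives t**3 / 6.
print(rl_integral(lambda tau: tau, 1.0, 2.0), 1.0 / 6.0)
```

For a constant function the rule is exact up to rounding, because the kernel weights telescope to t^α/α. For smooth f the error shrinks as n grows; a production implementation would more likely use a higher-order scheme.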

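R-L integration obeys the following important relations (for α, β > 0, constants c₁ and c₂, and sufficiently well-behaved functions f and g):

$$I^\alpha I^\beta f = I^{\alpha+\beta} f = I^\beta I^\alpha f$$

$$I^\alpha (c_1 f + c_2 g) = c_1 I^\alpha f + c_2 I^\alpha g$$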

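One might naively assume that we are done, and that we can simply define fractional differentiation to order α by replacing α with -α:

$$D^\alpha f(t) = I^{-\alpha} f(t) = \frac{1}{\Gamma(-\alpha)}\int_0^t (t-\tau)^{-\alpha-1} f(\tau)\,d\tau$$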

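Not so. The problem (well, one of the problems) is that the gamma function is not defined at zero or at the negative integers, so this formula breaks down exactly when α is a positive integer, leaving us with a generalization of differentiation that doesn’t even work for ordinary differentiation! Another problem is that for α > 0 the kernel (t-τ)^(-α-1) is too singular at τ = t for the integral to converge. We will have to be creative to find a way around this.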

Let’s first note that differentiating n times after integrating n times is equivalent to the identity operation:

$$D^n I^n f = f$$

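This means that the derivative is a left inverse of the integral. However, integration is not a left inverse of the derivative, because differentiation throws away information (a constant, or more generally a polynomial of degree less than n) that integration cannot restore. That is, it is not generally true that:

$$I^n D^n f = f$$

For example, with f(t) = t + 5 and a lower limit of 0, integrating the derivative gives ∫₀ᵗ 1 dτ = t, not t + 5.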

With this in mind, we expect the fractional derivative of order α to have the left-inverse property:

$$D^\alpha I^\alpha f = f$$
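To make the left-inverse requirement concrete, here is a minimal Python sketch that works only on single powers c·t^k (k > -1, lower limit 0). It assumes the R-L power rule given later in this article, D^α t^k = Γ(k+1)/Γ(k+1-α) t^(k-α), with a negative order meaning integration and 1/Γ taken as 0 at the poles of Γ. The helper names `rgamma` and `frac_power` are hypothetical. The sketch also checks the relation I^α I^β = I^(α+β) on one example.

```python
import math


def rgamma(x: float) -> float:
    """Reciprocal gamma function 1/Gamma(x), set to 0 at the poles x = 0, -1, -2, ..."""
    if x <= 0 and x == math.floor(x):
        return 0.0
    return 1.0 / math.gamma(x)


def frac_power(coef: float, k: float, alpha: float) -> tuple[float, float]:
    """Apply the order-alpha R-L operator (lower limit 0) to coef * t**k.

    alpha > 0 differentiates and alpha < 0 integrates, using the power rule
    Gamma(k + 1) / Gamma(k + 1 - alpha) * t**(k - alpha), valid for k > -1.
    Returns the result as a (coefficient, exponent) pair.
    """
    if coef == 0.0:
        return 0.0, k - alpha  # the zero function stays zero
    if k <= -1:
        raise ValueError("power rule needs k > -1")
    return coef * math.gamma(k + 1) * rgamma(k + 1 - alpha), k - alpha


half = 0.5

# Left inverse: D^(1/2) applied after I^(1/2) gives back t**k.
for k in (0.0, 1.0, 2.5):
    c, e = frac_power(*frac_power(1.0, k, -half), half)
    print(f"D^1/2 I^1/2 t^{k}: coefficient {c:.12f}, exponent {e}")

# Not a right inverse: D^(1/2) sends t**(-1/2) to 0, and I^(1/2) of 0 is 0.
c, e = frac_power(1.0, -0.5, half)
print("D^1/2 t^-1/2 coefficient:", c)
print("I^1/2 D^1/2 t^-1/2 coefficient:", frac_power(c, e, -half)[0])

# Semigroup check: I^0.3 I^0.7 t^2 should equal I^1 t^2 = t^3 / 3.
c, e = frac_power(*frac_power(1.0, 2.0, -0.7), -0.3)
print("I^0.3 I^0.7 t^2:", c, "* t^", e)
```

The middle part is the fractional analogue of a constant disappearing under d/dt: the semiderivative annihilates t^(-1/2), so no fractional integral can bring it back. This is why only the left-inverse property is demanded.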

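We’d also, obviously, like to be able to write the fractional derivative in terms of operators that we understand. We understand differentiation to integer order, and we understand integration to both integer and non-integer order. An operator built from these components that has the desired left-cancellation property is:

$$D^\alpha f = D^{\lceil\alpha\rceil} I^{\lceil\alpha\rceil-\alpha} f$$

Indeed, integrating to order α and then to order ⌈α⌉-α amounts to integrating to order ⌈α⌉, so D^α I^α f = D^⌈α⌉ I^⌈α⌉ f = f.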

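Here ⌈α⌉ is the ceiling of α, the result of rounding α up to the nearest integer. This turns out to be the correct operator, and for non-integer α, written out in full, it looks like:

$$D^\alpha f(t) = \frac{1}{\Gamma(m-\alpha)}\,\frac{d^m}{dt^m}\int_0^t (t-\tau)^{m-\alpha-1} f(\tau)\,d\tau, \qquad m = \lceil\alpha\rceil$$

When α is a positive integer, ⌈α⌉ - α = 0, I⁰ is taken to be the identity, and D^α reduces to the ordinary derivative.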

This is the left Riemann-Liouville fractional derivative. Looking at that beast, one can understand why it took nearly 300 years for this field of research to go anywhere: most computations in fractional calculus are tedious, if not utterly intractable, when done by hand without the aid of a computer. The special case α = 1/2 is called the semiderivative.
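The construction D^α = D^⌈α⌉ I^(⌈α⌉-α) also suggests a direct numerical recipe. The Python sketch below assumes 0 < α < 1 (so ⌈α⌉ = 1) and a lower limit of 0: it evaluates the order 1-α integral with a simple product-rectangle rule (the hypothetical helper `rl_integral`) and then differentiates with a central difference (the hypothetical helper `rl_derivative`). The step `h` and grid size `n` are arbitrary illustrative choices, not tuned values.

```python
import math
from typing import Callable


def rl_integral(f: Callable[[float], float], t: float, alpha: float, n: int = 2000) -> float:
    """Left R-L integral I^alpha f(t), lower limit 0, by a product-rectangle rule."""
    if t <= 0:
        return 0.0
    total = 0.0
    for j in range(n):
        u_hi = t * (n - j) / n          # t - tau at the left end of subinterval j
        u_lo = t * (n - j - 1) / n      # t - tau at the right end (exactly 0 at the end)
        weight = (u_hi ** alpha - u_lo ** alpha) / alpha
        total += weight * f(t - (u_hi + u_lo) / 2)
    return total / math.gamma(alpha)


def rl_derivative(f: Callable[[float], float], t: float, alpha: float,
                  h: float = 1e-3, n: int = 2000) -> float:
    """Left R-L derivative D^alpha f(t), lower limit 0, for 0 < alpha < 1.

    Follows D^alpha = D^1 I^(1 - alpha): compute the fractional integral of
    order 1 - alpha numerically, then take a central difference in t.
    """
    if not 0 < alpha < 1:
        raise ValueError("this sketch only handles 0 < alpha < 1")
    if t <= h:
        raise ValueError("need t > h so that t - h stays in the domain")
    forward = rl_integral(f, t + h, 1 - alpha, n)
    backward = rl_integral(f, t - h, 1 - alpha, n)
    return (forward - backward) / (2 * h)


# Semiderivative of the constant 1 at t = 1, next to the closed form 1/sqrt(pi t).
print(rl_derivative(lambda tau: 1.0, 1.0, 0.5), 1 / math.sqrt(math.pi))

# Semiderivative of f(t) = t at t = 1, next to Gamma(2)/Gamma(1.5).
print(rl_derivative(lambda tau: tau, 1.0, 0.5), 1 / math.gamma(1.5))
```

Integrating first and differentiating last mirrors the order of operations in the formula; it also avoids ever touching the divergent kernel of the naive definition.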

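Using the fractional integral and derivative that we’ve developed, we can now combine them piecewise to define the differintegral operator:

$$\mathcal{D}^\alpha f = \begin{cases} D^\alpha f, & \alpha > 0 \\ f, & \alpha = 0 \\ I^{-\alpha} f, & \alpha < 0 \end{cases}$$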

The following animation shows how the Riemann-Liouville differintegral continuously transforms f(x) = x into its derivative, 1, and its antiderivative, (1/2)x²:

Observe how, as α ranges from -1 to 1, the differintegral (the green curve) sweeps from the curve y = (1/2)x² through the line y = x to the line y = 1.
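The same sweep can be tabulated with a short Python sketch. It assumes a lower limit of 0 and x > 0, and uses the power rule for R-L operators given later in this article, which for f(x) = x reduces to x^(1-α)/Γ(2-α) for any α < 2 (negative α meaning integration). `differintegral_of_x` is a hypothetical name.

```python
import math


def differintegral_of_x(alpha: float, x: float) -> float:
    """R-L differintegral (lower limit 0) of f(x) = x, for alpha < 2 and x > 0.

    Power rule with exponent 1: Gamma(2) / Gamma(2 - alpha) * x**(1 - alpha),
    and Gamma(2) = 1. alpha = -1 gives x**2 / 2, alpha = 0 gives x, alpha = 1 gives 1.
    """
    return x ** (1 - alpha) / math.gamma(2 - alpha)


x = 1.5
for alpha in (-1.0, -0.5, 0.0, 0.5, 1.0):
    print(f"alpha = {alpha:+.1f}: {differintegral_of_x(alpha, x):.4f}")
```

The endpoint rows match the antiderivative (1/2)x², the function itself, and the derivative 1; the rows in between are the intermediate curves of the animation at one value of x.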

Properties

It is always good to be curious about what happens when you do something weird like plugging a fraction in for the order of differentiation, since that is how many important discoveries are made. But when heading into uncharted territory, you should be prepared to abandon much of what you already know and take for granted as natural and obvious.

That is a trite way of saying that many of the basic properties of ordinary derivatives and integrals that we are familiar and comfortable with, like the chain and product rules, do not hold for fractional derivatives and integrals in general, or take on much more complicated forms. The R-L integral and derivative are not the only possible differintegral operators, though: there is an entire universe of ways to generalize differentiation and integration to non-integer orders, and some of them preserve many of the classical properties. We focus on the R-L operators in this article because they, along with the closely related Caputo operators, are the easiest to understand and the most common in applications.

Another interesting property of the Riemann-Liouville fractional derivative (RLFD) is nonlocality. The value of an integer-order derivative at a point depends only on the behavior of the function in an arbitrarily small neighborhood of that point. This seemingly obvious property is called locality. Things are different with the fractional derivative. It is obtained by integrating over an entire interval, and its value depends nontrivially on the lower bound of integration, so we should properly have written the fractional derivative as:

$${}_aD_t^\alpha f(t) = \frac{1}{\Gamma(m-\alpha)}\,\frac{d^m}{dt^m}\int_a^t (t-\tau)^{m-\alpha-1} f(\tau)\,d\tau, \qquad m = \lceil\alpha\rceil$$

The case a = 0 is common when analyzing physical systems, because the independent variable is often time, and the fractional derivative at any given time then depends on the state of the system at all previous times, that is, at every instant since the start of the experiment at t = 0.
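Nonlocality is easy to see with the constant function f = 1. For 0 < α < 1 its left R-L derivative with lower bound a is (t - a)^(-α)/Γ(1 - α), the n = 0 power rule shifted to start at a. The Python sketch below (a hypothetical helper using the closed form, not numerics) evaluates it at t = 1 for several lower bounds. The function is identical near t = 1 in every case, and every integer-order derivative there is 0, yet the semiderivative changes with a.

```python
import math


def rl_derivative_of_constant(t: float, a: float, alpha: float) -> float:
    """Left R-L derivative of f = 1 with lower bound a, for 0 < alpha < 1.

    Closed form: (t - a)**(-alpha) / Gamma(1 - alpha).
    """
    if not 0 < alpha < 1:
        raise ValueError("this sketch assumes 0 < alpha < 1")
    if t <= a:
        raise ValueError("need t > a")
    return (t - a) ** (-alpha) / math.gamma(1 - alpha)


# Same function, same point t = 1, different "start of the experiment" a.
for a in (0.0, -1.0, -100.0):
    print(f"a = {a:7.1f}: semiderivative of 1 at t = 1 is {rl_derivative_of_constant(1.0, a, 0.5):.4f}")
```

The further back the lower bound, the smaller the result: the operator keeps a fading memory of the whole interval [a, t].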

This nonlocality is one of the main drivers of interest in fractional calculus in applications. There are many interesting physical phenomena that have what are called memory effects, meaning that their state does not depend solely on time and position but also on previous states. For instance, one can imagine an electrical circuit component whose resistance depends on all of the charge that has passed through it over a fixed length of time. Systems with memory effects can be very difficult to model and analyze with classical differential equations, but nonlocality gives fractional derivatives a built-in ability to incorporate memory effects. Fractional calculus could therefore prove to be a very useful tool for analyzing this class of systems.

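Nonlocality is also the reason we have to specify that we are discussing the left RLFD. One can instead integrate from t up to an upper bound b, which defines the right fractional derivative:

$${}_tD_b^\alpha f(t) = \frac{(-1)^m}{\Gamma(m-\alpha)}\,\frac{d^m}{dt^m}\int_t^b (\tau-t)^{m-\alpha-1} f(\tau)\,d\tau, \qquad m = \lceil\alpha\rceil$$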

The right RLFD is a fundamentally different object from the left one despite the similar appearance. Right fractional derivatives haven’t been studied as much, and they are not as useful in applied settings. To understand why, consider what nonlocality meant for the left RLFD: the state of a physical system depended on its state at previous times. If a right RLFD described a physical system, then the state of that system at a given time would depend on its future states, which is not physically reasonable. Since most research into fractional calculus is focused on applications, right fractional derivatives are mostly of interest to theorists for now.

Fractional derivatives of some basic functions

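For power functions t^n with n ≥ 0, the fractional derivative (with lower limit 0) is:

$$D^\alpha t^n = \frac{\Gamma(n+1)}{\Gamma(n+1-\alpha)}\,t^{n-\alpha}$$

where 1/Γ is taken to be 0 at the poles of Γ, so that integer-order cases such as the first derivative of a constant come out as 0.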

Setting n = 0 shows that the fractional derivative of a constant is, surprisingly, not zero for non-integer α: D^α 1 = t^(-α)/Γ(1-α). The semiderivative of the constant function f(t) = 1 is worth memorizing:

$$D^{1/2}\,1 = \frac{1}{\sqrt{\pi t}}$$
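The power rule is simple enough to code directly. The Python sketch below assumes a lower limit of 0, n > -1 and t > 0, and treats 1/Γ as 0 at the poles of Γ so that integer-order cases such as the first derivative of a constant come out as 0. A negative α gives the R-L integral instead. `rl_power_derivative` is a hypothetical name.

```python
import math


def rl_power_derivative(n: float, alpha: float, t: float) -> float:
    """D^alpha t^n with lower limit 0: Gamma(n+1) / Gamma(n+1-alpha) * t**(n-alpha).

    Assumes n > -1 and t > 0. When n + 1 - alpha is 0 or a negative integer,
    1/Gamma vanishes and so does the result (e.g. d/dt of a constant).
    """
    s = n + 1 - alpha
    if s <= 0 and s == math.floor(s):
        return 0.0
    return math.gamma(n + 1) / math.gamma(s) * t ** (n - alpha)


t = 2.0
print(rl_power_derivative(0, 0.5, t), 1 / math.sqrt(math.pi * t))  # semiderivative of 1
print(rl_power_derivative(0, 1.0, t))    # ordinary derivative of a constant
print(rl_power_derivative(2, 1.0, t))    # d/dt t^2 = 2t
print(rl_power_derivative(1, -1.0, t))   # alpha = -1 integrates t: t^2 / 2
```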

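For the sine function, taking the lower limit of integration to be a = -∞ (with a = 0 the result is not a pure phase shift):

$${}_{-\infty}D_t^\alpha \sin t = \sin\left(t + \frac{\alpha\pi}{2}\right)$$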

This is the case that most strongly supports our claim that the fractional derivative can be thought of as a transformation between functions and their derivatives. Changing α simply causes the phase to advance, until at α=1 we get the cosine function.

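Lastly, for the exponential function e^(kt) with k > 0, again with lower limit a = -∞:

$${}_{-\infty}D_t^\alpha e^{kt} = k^\alpha e^{kt}$$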

As with the sine function, this is exactly what we would expect: the integer-order rule dⁿ/dtⁿ e^(kt) = kⁿ e^(kt) carries over unchanged.

Interpretation

It is not yet clear how we should interpret fractional operators geometrically and physically in the way we do with operators in classical calculus. This is an area of active research, and a satisfying answer could lead to important results in physics and engineering.

In the meantime, the easiest approach is the one Oliver Heaviside took when he encountered fractional operators while developing his operational calculus: accept that they exist as a class of objects in their own right that follow a particular set of rules. Then, if you ever encounter something that follows those rules, or need something that does, you know what to look for.

The tautochrone problem

Niels Henrik Abel (1802–1829) is generally credited with the first application of fractional calculus, which arose in his analysis of the tautochrone problem. The tautochrone problem asks for a curve with the property that when a bead slides down it without friction under gravity, the time it takes to reach the bottom is independent of the starting height.

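Abel used basic physical reasoning (conservation of energy gives the bead a speed of √(2g(y₀ - y)) at height y) to arrive at the following integral equation, which relates the time to reach the bottom of the curve to the initial height y₀:

$$T(y_0) = \int_0^{y_0} \frac{1}{\sqrt{2g\,(y_0-y)}}\,\frac{ds}{dy}\,dy$$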

Here s is arc length along the curve that solves the problem, and we need to solve this equation for ds/dy. We could do this with convolutions and Laplace transforms, as is common in modern treatments. Alternatively, we can shortcut all of that by recognizing that, once it is divided by Γ(1/2) = √π, the integral on the right is a semi-integral. Since the fall time is constant with respect to the initial height, let T(y₀) = T₀. Multiplying both sides by √(2g) and dividing by √π then gives:

$$\frac{\sqrt{2g}\,T_0}{\sqrt{\pi}} = \frac{1}{\Gamma(1/2)}\int_0^{y_0} (y_0-y)^{-1/2}\,\frac{ds}{dy}\,dy = I^{1/2}\left[\frac{ds}{dy}\right](y_0)$$

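We know how to cancel the semi-integral: take the semiderivative of each side. The left side is a constant, and the semiderivative of a constant c is c/√(πy), so the problem is immediately solved:

$$\frac{ds}{dy} = \frac{\sqrt{2g}\,T_0}{\sqrt{\pi}}\cdot\frac{1}{\sqrt{\pi y}} = \frac{\sqrt{2g}\,T_0}{\pi\sqrt{y}}$$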

The curve described by this equation (a cycloid, incidentally) is called the tautochrone curve.
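We can check numerically that ds/dy = √(2g)T₀/(π√y) really gives a fall time independent of the starting height. The Python sketch below evaluates Abel's integral T(y₀) after the substitution y = y₀ sin²θ, which removes the singularity at y = y₀, and compares the tautochrone with a straight 30° ramp. Assumptions: y is measured upward from the bottom, g = 9.81 m/s², T₀ = 1.5 s, and the formula only describes a real curve while ds/dy ≥ 1, i.e. for y ≤ 2gT₀²/π² (about 4.5 m here). All names are hypothetical.

```python
import math
from typing import Callable

G = 9.81   # assumed gravitational acceleration, m/s^2
T0 = 1.5   # desired fall time in seconds (arbitrary choice for the example)


def fall_time(ds_dy: Callable[[float], float], y0: float, n: int = 10000) -> float:
    """Evaluate T(y0) = integral_0^y0 ds/dy / sqrt(2 G (y0 - y)) dy numerically.

    With y = y0 sin^2(theta) the integral becomes
    integral_0^(pi/2) ds/dy(y) * 2 sqrt(y0) sin(theta) / sqrt(2 G) dtheta,
    which has no singular endpoint and is evaluated with the midpoint rule.
    """
    h = (math.pi / 2) / n
    total = 0.0
    for i in range(n):
        theta = (i + 0.5) * h
        y = y0 * math.sin(theta) ** 2
        total += ds_dy(y) * 2 * math.sqrt(y0) * math.sin(theta) / math.sqrt(2 * G)
    return total * h


def tautochrone(y: float) -> float:
    # ds/dy = sqrt(2g) T0 / (pi sqrt(y)), the solution found above
    return math.sqrt(2 * G) * T0 / (math.pi * math.sqrt(y))


def straight_ramp(y: float) -> float:
    # Straight incline at 30 degrees: ds/dy = 1 / sin(30 deg) = 2
    return 2.0


for y0 in (0.1, 0.5, 2.0):
    print(f"y0 = {y0}: tautochrone {fall_time(tautochrone, y0):.6f} s, "
          f"ramp {fall_time(straight_ramp, y0):.6f} s")
```

The tautochrone column stays at T₀ for every starting height, while the ramp's fall time grows like √y₀, as elementary kinematics predicts for a straight incline.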

This problem illustrates the main use of fractional calculus as things currently stand: when analyzing a system, we happen to encounter an expression that turns out to be a fractional operator, and we can then apply the rules of fractional operators to that system.

Conclusion

One of the greatest ways to make discoveries in math and science is to see what happens when we break the rules by trying to make our existing theories work in extreme or unusual cases. (I alluded to this in a previous article.) Often that doesn’t go anywhere, because rules sometimes exist for a reason, but sometimes we get a great answer when we ask a ridiculous question. This is certainly one of those times.

As always, I appreciate any corrections.